TLSeminar

Testing and Verification of TLS

Introduction

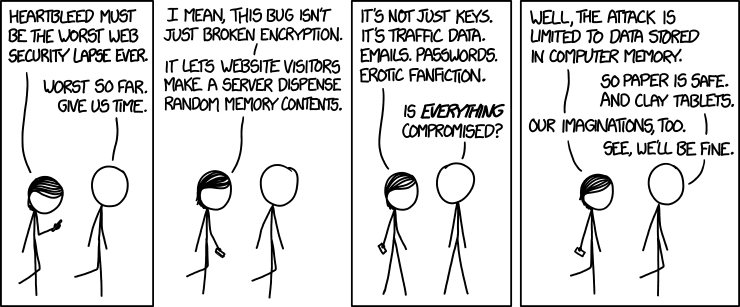

TLS/SSL is the most widely adopted protocol for securing online communication. However, as we have seen in the past few weeks, creative attackers have found it riddled with exploitable weaknesses. Rather than just reacting to attacks as they are discovered, many projects instead proactively seek out potential security flaws to implement remedies before vulnerabilities are exploited. This is done primarily by testing and verification, the topic of this week’s blog post.

First, we will examine some motivating attacks on TLS implementations, including the Heartbleed attack, the CRIME attack, and the infamous “goto fail”, as well as their solutions. Next, we will discuss differential testing, which one research group applied by creating well-formed but forged certificates, called “frankencerts”, and using them to compare the responses of many popular implementations such as OpenSSL, and how those implementations strengthened their defenses afterward (or didn’t). Finally, we introduce verification, which takes advantage of the relationship between computer systems and mathematics to rigorously prove properties about programs, either by type-checking existing programs or building a program from scratch starting with abstract refinement types.

Heartbleed

Heartbleed by Codenomicon (2014)

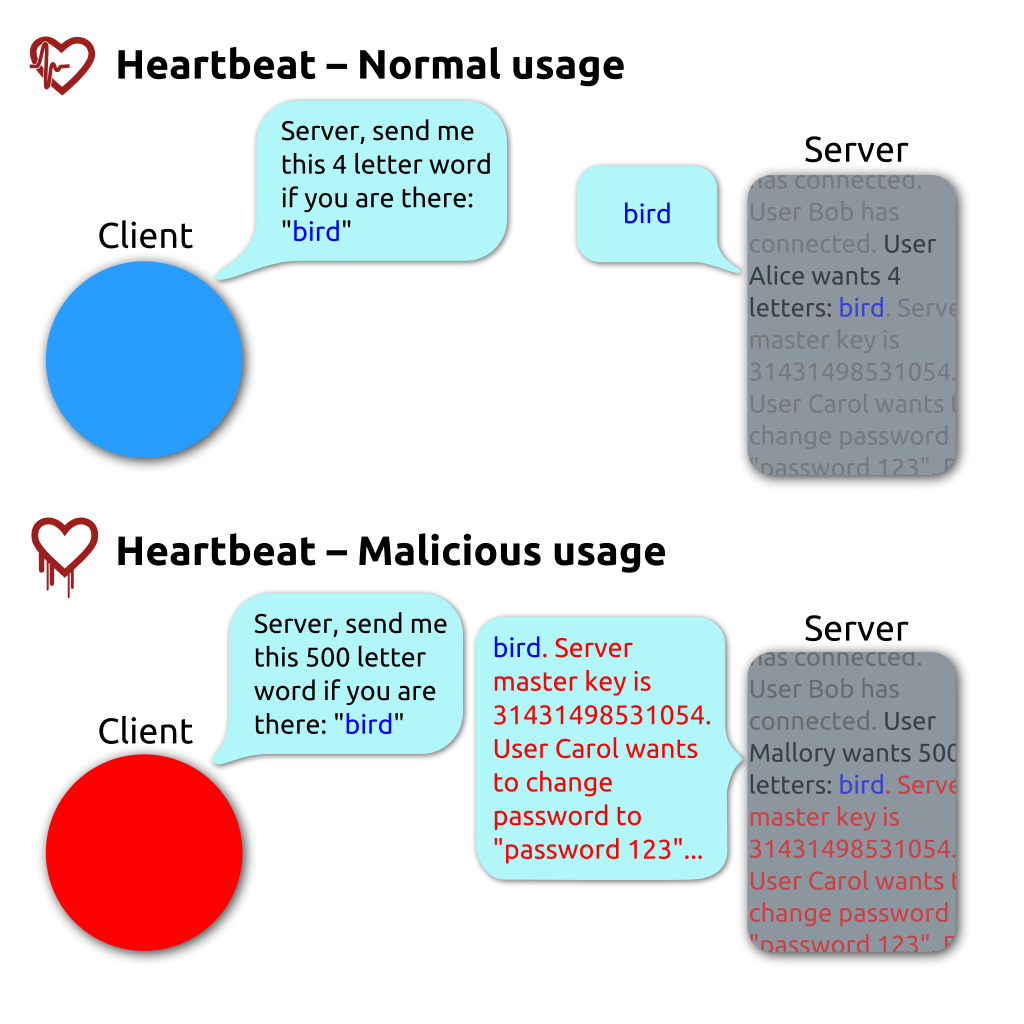

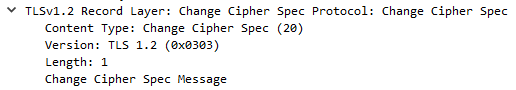

OpenSSL’s Heartbleed bug exploits heartbeat requests between a client and a server. In the vulnerable OpenSSL implementation, the receiver of a heartbeat request does not check the actual length of the payload but trusts the length field in the request message. If the real payload is shorter than the length specified in the length field, then the payload returned in the heartbeat response can also contain whatever happened to be in the adjacent memory. In this manner, secret keys, login credentials, sensitive data, and miscellaneous memory are all at risk of exposure.
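The over-read can be illustrated with a toy model. This is only a sketch: the `MEMORY` buffer, its contents, and both handler functions are hypothetical stand-ins, not OpenSSL code. It shows why echoing back the claimed length leaks neighbouring data, and how a bounds check stops it.

```python
# Toy model of process memory: the 4-byte heartbeat payload is followed by
# unrelated secrets that happen to sit next to it (hypothetical contents).
MEMORY = bytearray(b"bird" + b"|user=alice;password=hunter2|private-key-bytes")
PAYLOAD_OFFSET = 0
ACTUAL_PAYLOAD_LEN = 4  # only b"bird" was really sent


def heartbeat_vulnerable(claimed_len: int) -> bytes:
    """Echo back claimed_len bytes, trusting the attacker-supplied length."""
    return bytes(MEMORY[PAYLOAD_OFFSET:PAYLOAD_OFFSET + claimed_len])


def heartbeat_fixed(claimed_len: int) -> bytes:
    """Drop requests whose claimed length exceeds what was actually received."""
    if claimed_len > ACTUAL_PAYLOAD_LEN:
        return b""  # discard the malformed request
    return bytes(MEMORY[PAYLOAD_OFFSET:PAYLOAD_OFFSET + claimed_len])


leaked = heartbeat_vulnerable(32)  # includes bytes beyond the real payload
safe = heartbeat_fixed(32)         # empty: the request is rejected
```

In the sketch, the only difference between the two handlers is the comparison of the claimed length against the number of bytes actually received.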

Source: Wikipedia

Compression Ratio Info-Leak Mass Exploitation (CRIME)

The CRIME Attack by Juliano Rizzo and Thai Duong (2013)

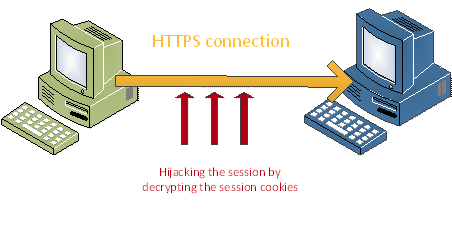

The CRIME attack was developed by Juliano Rizzo and Thai Duong in 2012. It exploits TLS compression by injecting plaintext into a victim’s web requests and observing the length of the resulting ciphertext. Tampering with web requests is made possible by injecting malicious JavaScript code through a network attack. Through many trials, the attacker can steal user cookies and hijack the session.
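The length side channel can be reproduced with ordinary compression. The sketch below uses Python's `zlib` as a stand-in for TLS compression; the cookie value, host name, and request layout are made up for illustration. When the injected text matches the secret, the compressor replaces the repeat with a short back-reference, so the output is shorter.

```python
import zlib

SECRET_COOKIE = "sessionid=7f3a9c2e41d8"  # hypothetical secret the attacker wants


def observed_length(injected: str) -> int:
    """Length of the compressed request, which is all the attacker observes."""
    request = (
        f"GET /?q={injected} HTTP/1.1\r\n"
        f"Host: example.com\r\n"
        f"Cookie: {SECRET_COOKIE}\r\n\r\n"
    )
    return len(zlib.compress(request.encode()))


# A guess that matches the cookie compresses better than one that does not.
right = observed_length("sessionid=7f3a9c2e41d8")
wrong = observed_length("sessionid=zq0xw5kv9j6b")
```

The real attack refines its guess one character at a time rather than testing a whole cookie value at once; the comparison here only shows the signal it relies on.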

Source: Infosec Institute

Goto Fail;

Understanding Apple ‘goto fail’ Vulnerability by Amit Sethi (2014)

In February 2014, Apple released a security update for many versions of its operating systems that fixed the following vulnerability in the function SSLVerifySignedServerKeyExchange.

    if ((err = SSLHashSHA1.update(&hashCtx, &signedParams)) != 0)
        goto fail;
        goto fail;
    … other checks …
    fail:
        … buffer frees (cleanups) …
        return err;

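The indentation of the second goto fail; is misleading. Because the if statement has no curly brackets, only the first goto fail; is conditional; the second is always executed. At that point err is still 0 from the successful hash update, so the function skips the vital signature check and returns success, accepting both good and bad signatures.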

Differential Testing

Using Frankencerts for Automated Adversarial Testing of Certificate Validation by Chad Brubaker, Suman Jana, Baishakhi Ray, Sarfraz Khurshid, and Vitaly Shmatikov (2014)

An empirical study of goto in C code from GitHub repositories by Meiyappan Nagappan, Romain Robbes, Yasutaka Kamei, Éric Tanter, Shane McIntosh, Audris Mockus, and Ahmed E. Hassan (2015)

Differential testing is the process of searching for bugs in software by running multiple programs on the same inputs. If there is a discrepancy between one program’s results on a given input and another’s, it’s likely that one of the implementations is buggy. A differential testing system will flag such results for human review.
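As a minimal sketch of the idea, the code below runs three toy validators on the same inputs and flags any input on which they disagree. The validators, the dictionary-based "certificates", and the field names are all hypothetical; real libraries parse and validate X.509 structures.

```python
from typing import Callable, Dict, List, Tuple

Cert = Dict[str, bool]  # toy stand-in for a parsed certificate


def validator_a(cert: Cert) -> bool:
    return (not cert["expired"]) and cert["issuer_is_ca"]


def validator_b(cert: Cert) -> bool:
    return (not cert["expired"]) and cert["issuer_is_ca"]


def validator_c(cert: Cert) -> bool:
    # Deliberately buggy: forgets to check that the issuer may act as a CA.
    return not cert["expired"]


VALIDATORS: Dict[str, Callable[[Cert], bool]] = {
    "a": validator_a,
    "b": validator_b,
    "c": validator_c,
}


def differential_test(
    validators: Dict[str, Callable[[Cert], bool]], certs: List[Cert]
) -> List[Tuple[Cert, Dict[str, bool]]]:
    """Return every input on which the validators do not all agree."""
    flagged = []
    for cert in certs:
        verdicts = {name: check(cert) for name, check in validators.items()}
        if len(set(verdicts.values())) > 1:
            flagged.append((cert, verdicts))
    return flagged


good = {"expired": False, "issuer_is_ca": True}
expired = {"expired": True, "issuer_is_ca": True}
bad_issuer = {"expired": False, "issuer_is_ca": False}
flagged = differential_test(VALIDATORS, [good, expired, bad_issuer])
```

Note that the harness never needs to know the correct answer; disagreement alone is enough to send an input to human review.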

Brubaker et al. used this technique to test server authentication for many implementations of SSL/TLS as presented in their 2014 “Frankencerts” paper. The researchers initially had to deal with the dual problems of generating test case certificates and successfully interpreting the results of acceptance and rejection.

Generating certificates proved to be a challenge due to the nature of an SSL certificate. Simply randomly fuzzing valid certificates is unlikely to produce data parsable as a certificate. Manually creating test cases would take too long and potentially miss edge cases that a more statistically comprehensive generation method would find. The researchers decided on creating frankencerts by scanning 243,246 current certificates from the internet and randomly permuting their parts. This process guaranteed that the 8,127,600 frankencerts would be parsable as certificates and resulted in a wide variety of unusual combinations of extensions, key usage constraints, odd CAs, and other possible variations within a cert.
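The recombination step can be sketched as follows. This is a deliberate simplification: the seed "certificates" are plain dictionaries with made-up fields, whereas the real tool mixes parsed X.509 parts. Each field of a new certificate is drawn from a randomly chosen seed, so every part is individually well-formed even when the combination is unusual.

```python
import random

# Hypothetical seed certificates, reduced to a few illustrative fields.
SEED_CERTS = [
    {"subject": "CN=a.example", "key_usage": "digitalSignature",
     "basic_constraints": "CA:FALSE", "not_after": "2030-01-01"},
    {"subject": "CN=b.example", "key_usage": "keyCertSign",
     "basic_constraints": "CA:TRUE", "not_after": "2012-06-30"},
    {"subject": "CN=c.example", "key_usage": "keyEncipherment",
     "basic_constraints": "CA:TRUE,pathlen:0", "not_after": "2025-12-31"},
]


def make_frankencert(seeds, rng):
    """Pick each field independently from a randomly chosen seed certificate."""
    return {field: rng.choice(seeds)[field] for field in seeds[0]}


rng = random.Random(0)  # fixed seed so runs are repeatable
frankencerts = [make_frankencert(SEED_CERTS, rng) for _ in range(5)]
```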

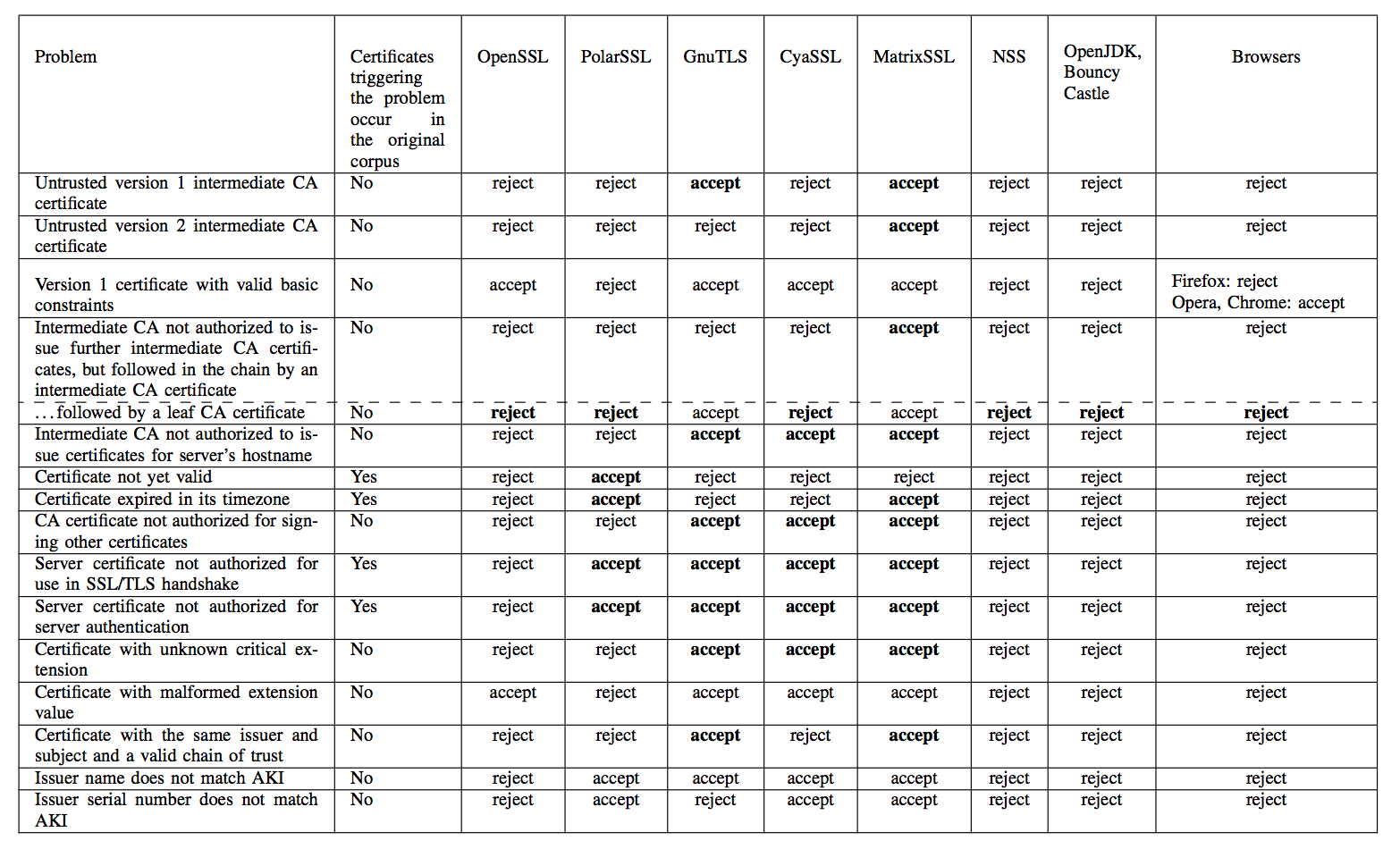

The researchers then ran OpenSSL, NSS, GnuTLS, CyaSSL, PolarSSL, MatrixSSL, OpenJDK, and Bouncy Castle on the frankencerts, looking for discrepancies between results. Because each of these libraries is intended to implement the same X.509 certificate validation logic, any discrepancy in certificate acceptance between them would indicate that SOMEONE was screwing up. Thus the researchers dealt with the second issue, that of interpreting the results of their tests.

Running the differential test on all 8 million frankencerts and the quarter-million real certificates produced 208 discrepancies that, when manually investigated, uncovered many serious flaws in the various implementations (error results are bolded):

Source: Chad Brubaker, Suman Jana, Baishakhi Ray, Sarfraz Khurshid, and Vitaly Shmatikov

Any of the invalid acceptances would allow for an in-the-middle attack to be crafted against the implementation in question. The researchers contacted the developers of the various SSL/TLS implementations affected and reported their results before publishing, allowing the developers time to fix their bugs.

Chen and Su’s mucert builds on the frankencert method. Mucerts are randomly fuzzed test certificates generated from a seed set of certificates. The fuzzing, or mutating, occurs in accordance with a number of guidelines that prevent mutations from generating unparseable certificates. Chen and Su statistically evaluated mucerts and compared them to frankencerts, finding that mucerts attained drastically higher levels of corner case coverage.

Verification

Verifying s2n HMAC with SAW by Joey Dodds (2016)

Implementing TLS with Verified Cryptographic Security by Karthikeyan Bhargavan, Cédric Fournet, Markulf Kohlweiss, Alfredo Pironti, and Pierre-Yves Strub (2013)

Software Foundations by Benjamin C. Pierce, et al. (2017)

Software testing can only assert properties about program behaviors covered in the test suite. There will always be missed edge cases and odd bugs that will show up in code. This is where we turn to formal specifications to ‘prove’ the correctness of our code.

But how do we go about ‘proving’ this correctness? Let’s look at what we’re trying to solve in the first case:

For each behavior X in code, formally prove that X does what it should and nothing else. For those that are familiar with proofs and mathematical abstractions, this is known as a non-existence proof. In formal verification, we must prove that a program satisfies a formal specification of its behavior. By using the methods of mathematics, we can make strong statements regarding the correctness of our cryptographic implementations, for example, TLS.

To get down to formal verification, we must first define the difference between verification and validation. Validation asks questions like “Does it meet the requirements?”, “Is it the right product?”, and “Does it function according to design?”. Verification, by contrast, asks the question “Does this software meet a given specification under given assumptions?”.

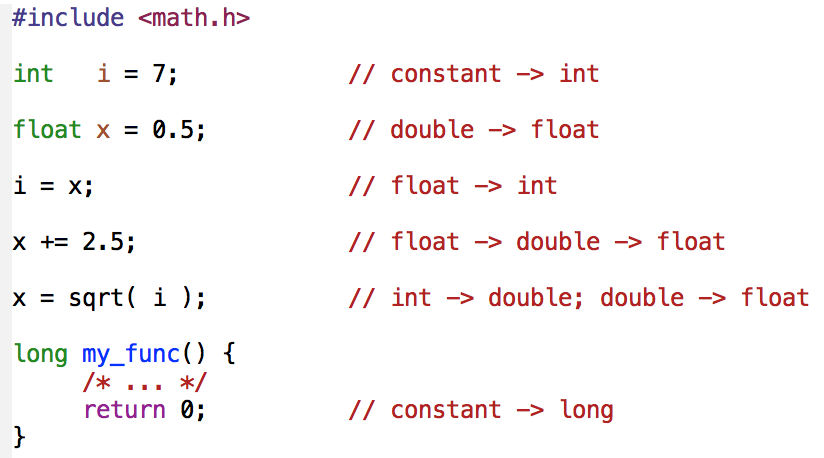

One requirement for nearly any stronger property is to first show that the code satisfies type safety. A program is considered type safe if and only if the only operations that can be performed on data are those sanctioned by the type of data. For example, we can look at the following program where integers, floats, and doubles are being implicitly cast amongst one another. In a type safe program, an integer could never be treated as a float (more importantly, a pointer type would never be treated as a non-pointer, and vice versa).

While the below program is well-typed, it illustrates some of the challenges faced when attempting to show type safety for typical C code:

There are a variety of formal concepts that are used in program verification including model checking, deductive verification, equivalence checking, theorem proving, and correctness by construction. The problem that arises, though, is that some of these rely on concepts that simply are not feasible in commercial-level programs. For example, model checking exhaustively checks whether a model of the program meets a given specification, but every behavior in the program must have proper transitions and states resulting in the state explosion problem. Put simply, as more behaviors are added to simple programs, the number of states in the model exponentially grows. Due to this, it is challenging to scale model checking to large systems, although extensive work has been done toward this goal and often complex programs can be abstracted in ways that enable useful model checking.

One popular approach to verification is to use expressive type systems. The Curry-Howard isomorphism relates programs directly to mathematical proofs, which makes it possible to define refinement types: types endowed with a predicate that is assumed to hold for every element of the refined type. This means we can write our program using refinement types that encode the safety properties of the system; if the program type-checks, those safety properties are proven to hold.

There’s a lot that can be done with formal verification, and TLS is a great use case for catching errors introduced during implementation. A number of projects verify individual parts of TLS and aim to combine them to formally verify TLS as a whole. This joint project is known as Project Everest. Everest is a pretty lofty goal considering the difficulty of formally verifying even small programs and scripts, but it has made considerable progress towards building a fully verified TLS implementation. The eventual goal of Everest is that when all the projects are combined, they will generate a C library that not only implements TLS 1.3 but is also proven secure, essentially ruling out the kinds of implementation flaws described above.

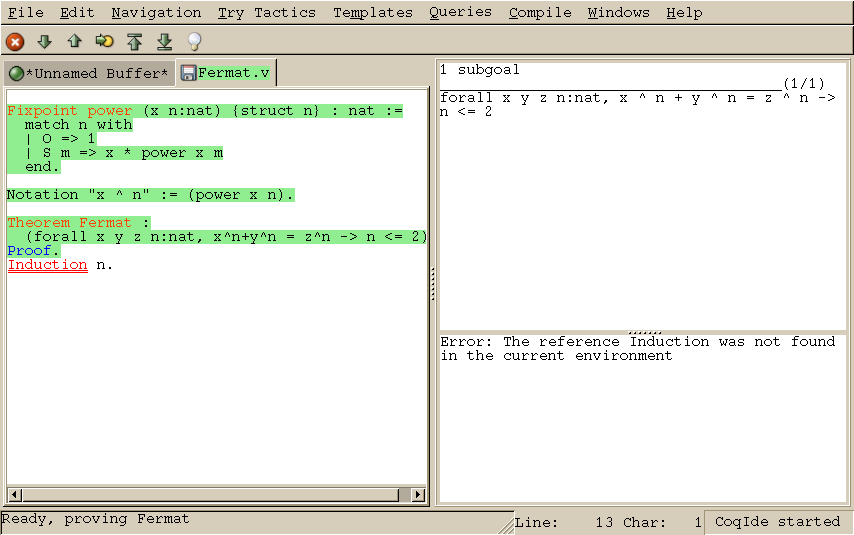

Coq

Team Cinnamon demonstrated a formal verification tool called Coq during class. Coq is a proof assistant for the verification of code. It implements a variety of mathematical and computational strategies that work, in combination with the user, to formally verify code, functions, and theorems. In class we ran through a couple of sample proofs to display the functionality and potential of Coq and to give an example of how a formal verification tool works.

A download for Coq is available at https://coq.inria.fr/ which offers different versions for different architectures. We used the CoqIDE which allows for editing Coq files with helpful syntax highlighting and is useful when trying to first learn Coq. For a good introduction to Coq, as well as a number of exercises to work through, a group at UPenn has a great lab focused on Coq.

Source: The Coq Proof Assistant

Coq is extremely powerful software to help formally verify computer programs, but it can be difficult to learn, requiring a change in mentality for most programmers. For any problems or difficulties found when using Coq, there is plenty of documentation available within Coq’s Reference Manual.

Conclusion

Implementing cryptographic protocols correctly remains a huge challenge. There are tools available now that can prove interesting properties about software, including even the absence of certain types of side channels. It is important to remember, though, that anything we prove about a program is a proof about a property based on a model of the execution environment, and assumptions about adversary capabilities. As verification and testing tools get more sophisticated, those models can come increasingly close to the actual environment and capabilities of real adversaries, but today, there remains a large gap.

Introduction

So far, we have learned about some real-world TLS attacks and the vulnerabilities they expose in different situations. Since the core SSL/TLS technology has persisted as the basis for securing many aspects of today’s Internet for more than twenty years, including data transfer, user passwords, and site authentication, it is important to also consider issues beyond the protocol.

This week, we’ll go on to discuss practical issues with TLS including HTTPS, certificates, key management and an attack called SSLstripping.

Trust Issues and Enhancements

SoK: SSL and HTTPS: Revisiting past challenges and evaluating certificate trust model enhancements, Jeremy Clark and Paul C. van Oorschot, IEEE Symposium on Security and Privacy (“Oakland”), 2013.

Certificates

The TLS protocol enables a client and a server to establish and communicate over a secure channel. Assuming such a secure channel can be created, authenticating the server still remains a challenge. HTTPS attempts to solve this problem using certificates, which bind public keys to servers. Web browsers trust certificates that are issued by certificate authorities (CAs).

A certificate is bound to a server through its domain name. When requesting a certificate for a domain name from a CA, the requester is challenged to demonstrate control of the domain name. Upon successful validation, the CA will digitally sign a domain validated (DV) certificate for the entity.

Stronger verification techniques are available due to security issues with hostname validation. A common verification technique used by CAs is to send an email to an email address associated with the domain. This becomes an issue when an attacker is able to spoof DNS records, such as through a DNS cache poisoning attack. Issues may also arise when an attacker is able to register an email address at the domain. For example, an attacker was able to convince a CA that they owned login.live.com by registering sslcertificates@live.com. In response, CAs offer extended validation (EV) certificates to entities willing to pay a premium and undergo more stringent validation.

Anchoring Trust

Although anyone can create a certificate for any site they want, clients should only trust a certificate if it has been signed by a CA they already trust. Browsers come pre-configured with a default list of CAs known as trust anchors. Mozilla’s Firefox 15 browser includes approximately 150 trust anchors.

Users may also add additional trust anchors to their system. This is commonly done by organizations in order to MITM their users’ HTTPS connections to perform content inspection, or by users who want to inspect the contents of their own HTTPS requests.

Because any trust anchor is able to issue trusted certificates for a website, an adversary need only target the weakest CA in order to obtain a fraudulent certificate. Furthermore, governments are in a position to compel CAs to create valid certificates to be used in MITM attacks.

To prevent misuse of fraudulent certificates, webservers may use HTTP Public Key Pinning (HPKP) to instruct browsers to remember the public key in a presented certificate, and to warn the user if a different key is ever presented for the same domain in the future. This way, even if an adversary has obtained a certificate that is trusted by a browser, they will be unable to perform a MITM attack. However, this technique requires the browser to blindly trust the first certificate that the webserver presents. An effective alternative is for browser vendors to include a list of certificates to pin within the browser.
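The trust-on-first-use behaviour of pinning can be sketched as a small lookup table. The `PinStore` class and its return values are hypothetical names for illustration; this is not the HPKP header format or any browser's implementation.

```python
import hashlib


class PinStore:
    """Remember a fingerprint of the first key seen for each domain."""

    def __init__(self):
        self._pins = {}

    def check(self, domain: str, public_key: bytes) -> str:
        fingerprint = hashlib.sha256(public_key).hexdigest()
        pinned = self._pins.get(domain)
        if pinned is None:
            # First contact: nothing to compare against, so trust blindly.
            self._pins[domain] = fingerprint
            return "pinned-on-first-use"
        return "ok" if pinned == fingerprint else "mismatch"


store = PinStore()
first = store.check("bank.example", b"genuine-key")
again = store.check("bank.example", b"genuine-key")
attack = store.check("bank.example", b"attacker-key")
```

The first call illustrates the weakness noted above: if the attacker is already present on first contact, the attacker's key is the one that gets pinned.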

Transitivity of Trust

In addition to signing certificates for webservers, trust anchors can issue certificates allowing other organizations to also act as CAs. While Firefox includes nearly 150 trust anchors from approximately 50 organizations, hundreds of organizations, including the US Department of Homeland Security, are trusted intermediate CAs.

Client software does not generally maintain a list of intermediate CAs. Rather, it uses a chain discovery mechanism to trace a server’s certificate back to a trust anchor. Such a chain must be carefully validated to check that each intermediate CA occurring in the chain has actually been granted authority to sign further certificates. This check was previously skipped by Microsoft’s CryptoAPI and Apple’s iOS.
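The authority check can be sketched on a toy chain. The `Cert` record and its `is_ca` flag are simplified stand-ins for X.509 fields, and signature verification is omitted entirely; the point is only that every certificate used as an issuer must itself be marked as a CA.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass(frozen=True)
class Cert:
    subject: str
    issuer: str
    is_ca: bool  # stand-in for the CA flag in the certificate


def chain_is_valid(chain: List[Cert], trust_anchors: Set[str]) -> bool:
    """chain[0] is the server certificate; chain[-1] is issued by a trust anchor."""
    if not chain or chain[-1].issuer not in trust_anchors:
        return False
    for child, parent in zip(chain, chain[1:]):
        if child.issuer != parent.subject:
            return False  # broken link in the chain
        if not parent.is_ca:
            return False  # issuer was never authorized to sign certificates
    return True


ANCHORS = {"Root CA"}
intermediate = Cert("Intermediate CA", "Root CA", is_ca=True)
server = Cert("bank.example", "Intermediate CA", is_ca=False)

# An ordinary site certificate misused to sign a certificate for another site.
attacker_leaf = Cert("attacker.example", "Intermediate CA", is_ca=False)
forged = Cert("bank.example", "attacker.example", is_ca=False)
```

A validator that skips the `is_ca` test would accept the forged chain, since every issuer name still links correctly back to the trust anchor.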

One way to ensure that every intermediate CA is visible to users is to publish a list of every valid certificate. This way, clients are able to know about intermediate CAs before their certificate is encountered. This is important because intermediate CAs can have just as much power as the trust anchors.

Maintenance of Trust (Revocation)

Sometimes a certificate needs to be revoked, such as when a site is compromised or abandoned, the domain name is exchanged, or the CA becomes aware of mistaken issuance. This revocation status must be readily available through the CA, either through a certificate revocation list (CRL) or the Online Certificate Status Protocol (OCSP).

Because this revocation information may be unavailable, browsers choose to accept certificates when the information cannot be located. Thus an adversary who is able to prevent a browser from obtaining revocation information may be able to cause a revoked certificate to be accepted.

Besides the list of all valid certificates described above, one way to combat unreliable revocation is for webservers to provide timestamped OCSP status reports, a technique known as Certificate Status Stapling. Alternatively, if certificates were issued to be valid for a shorter time, the impact of missing a revocation is lessened. Currently, certificates are often valid for years, but a 2012 proposal calls for certificates that remain valid for only four days, eliminating the need for a revocation mechanism (Topalovic et al.).

Indication and Interpretation of Trust

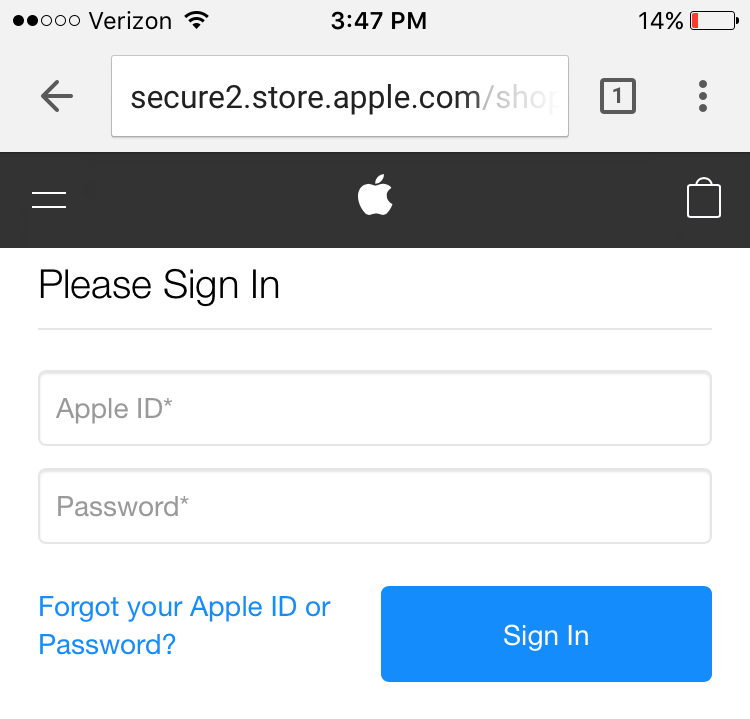

When a user browses to a website, they are expected to verify that they are connecting over HTTPS. This is indicated to the users through the https:// at the beginning of the URL in the address bar, and the green lock icon displayed by the browser. This icon may typically be clicked on to display more information about the website’s certificate. However, studies have shown that many users do not look for these indicators, and may even assume a page is secure based on the type of information being displayed.

Even when a browser displays a warning for a failed HTTPS connection, many users will click through and still log into the site. This may be due to users not understanding the certificate warning, not understanding the risks of visiting a site with an invalid certificate, or making a decision to visit the site anyway despite understanding and weighing the risks. Another common warning is the mixed scripting warning, indicating that JavaScript is being loaded over plain HTTP but being run within the HTTPS site’s privileges.

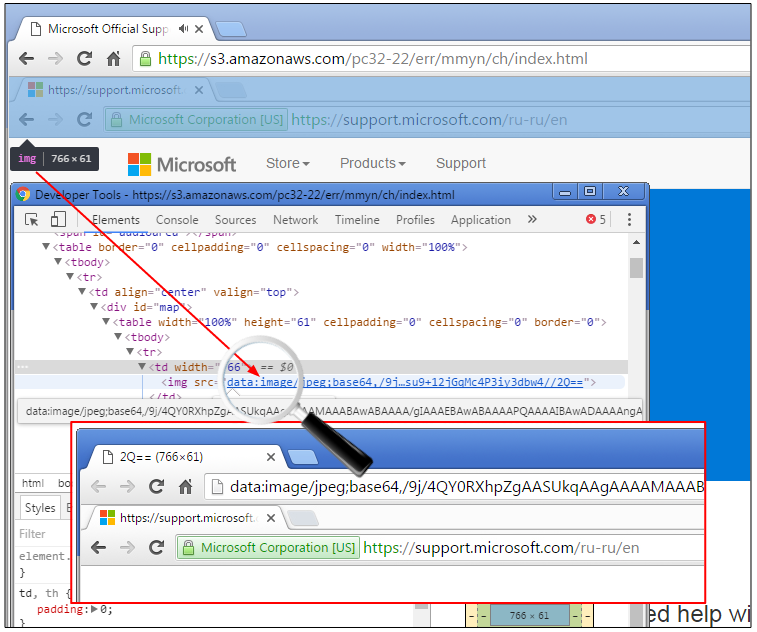

If an adversary expects a user to look for HTTPS indicators, they may be able to spoof common security cues. Some users believe an image of a lock on the website is a sign of a successful HTTPS connection. A more involved example is shown in the image below, where an attacker has simulated a browser address bar, complete with the HTTPS indicators that come with a valid EV certificate.

Fake Address Bar (Image from Malwarebytes)

CONIKS and Certificate Transparency

CONIKS: Bringing Key Transparency to End Users, Marcela S. Melara, Aaron Blankstein, Joseph Bonneau, Edward W. Felten, Michael J. Freedman, USENIX Security ‘15

CONIKS is a key management system intended to reduce the workload on clients to verify keys for secure communications. It’s an extension of the existing certificate transparency logs for webservers to end users. CONIKS simultaneously helps address the issue of service providers tampering with keys and of trust establishment that would otherwise be done out-of-band manually. The system is intended to prevent equivocation of keys, prevent the addition of unauthorized keys, and allow for transparent and public verification all while being efficient for users.

CONIKS is motivated by a desire to increase the use of end-to-end encryption, which has traditionally struggled with key management. Systems like WhatsApp and Apple’s iMessage use centralized storage of public keys which is vulnerable to key removal, key changing, or server takeover. Furthermore, many systems have no way (beyond clunky manual steps) to verify contacts are who they claim to be.

Design

The design of CONIKS involves several non-distinct participants: service providers, end users, and auditors. Service providers manage their own individual namespace of name (e.g., alice@host.com) to key bindings. While not assumed to be trustworthy, service providers are expected to have a reputation to uphold. End-users are the clients and intend to communicate with each other securely. Clients require only a relatively accurate clock and a usable network connection. They are also responsible for serving as auditors who track the key log for forgeries, invalid updates, and new unsolicited keys.

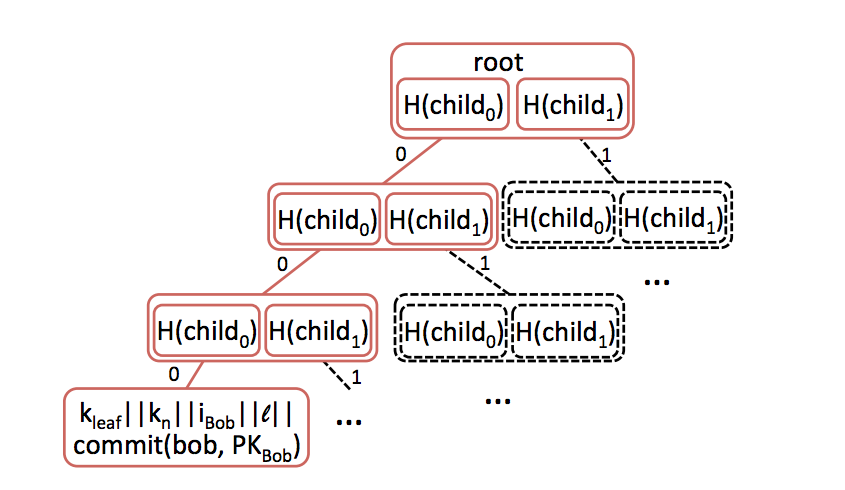

Each service provider constructs its directory of name → key mappings as a Merkle binary prefix tree, with each tree node representing a unique prefix.

Merkle Prefix tree (image from CONIKS paper)

As in other Merkle trees, interior nodes represent hashes of their left and right children. Leaf nodes are hashed over a nonce (\(k_n\)), a node index, the tree depth, and a cryptographic commitment of the user’s name and public key. Empty or placeholder nodes are hashed similarly, but instead include a different constant, \(k_{empty}\).

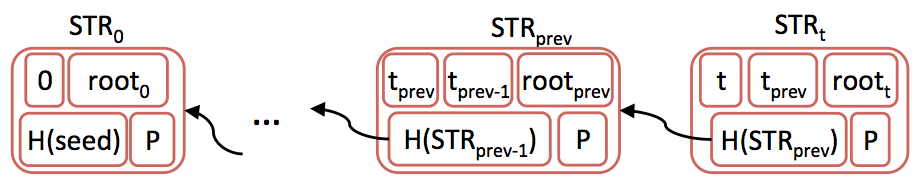

Signed STR chain (Image from CONIKS paper)

At regular intervals, or epochs, the service provider signs the Merkle root of the tree together with a sequentially increasing number that indicates the order of the blocks, producing a signed tree root (STR) that links to the previous one. This helps ensure that service providers cannot easily change the historical record: a provider that alters it must maintain the changed STR chain indefinitely.
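The chaining idea can be sketched as a hash chain of signed records. This is an illustration under assumptions, not the CONIKS wire format: the record layout is invented, each record is assumed to commit to a hash of its predecessor, and an HMAC with a hypothetical `PROVIDER_KEY` stands in for the provider's public-key signature.

```python
import hashlib
import hmac

PROVIDER_KEY = b"hypothetical-provider-signing-key"  # stand-in for a signing key


def str_digest(record: dict) -> bytes:
    """Hash that the next epoch's record commits to."""
    return hashlib.sha256(record["body"] + record["sig"]).digest()


def make_str(epoch: int, tree_root: bytes, prev) -> dict:
    """Build a signed tree root for this epoch, linked to the previous one."""
    prev_hash = str_digest(prev) if prev is not None else b"\x00" * 32
    body = epoch.to_bytes(8, "big") + tree_root + prev_hash
    sig = hmac.new(PROVIDER_KEY, body, hashlib.sha256).digest()
    return {"epoch": epoch, "prev_hash": prev_hash, "body": body, "sig": sig}


def chain_is_consistent(chain: list) -> bool:
    """Check epoch ordering and that each record links to its predecessor."""
    for prev, cur in zip(chain, chain[1:]):
        if cur["epoch"] != prev["epoch"] + 1:
            return False
        if cur["prev_hash"] != str_digest(prev):
            return False
    return True


s0 = make_str(0, hashlib.sha256(b"tree-0").digest(), None)
s1 = make_str(1, hashlib.sha256(b"tree-1").digest(), s0)
s2 = make_str(2, hashlib.sha256(b"tree-2").digest(), s1)

# Rewriting epoch 0 after the fact breaks the link stored in epoch 1.
rewritten0 = make_str(0, hashlib.sha256(b"tree-0-rewritten").digest(), None)
```

To hide a rewritten epoch, the provider would have to re-sign every later record as well and keep presenting that alternative chain to the affected clients.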

Common Operations

Registration in a CONIKS system occurs when the user sends their name and public key to the service provider. Since the server only publishes a signed record every epoch, it will issue a “temporary binding” in the meantime to validate the key, signing the user key, name, and eventual index.

To look up a key, clients will consult the server for a given name and receive the matching public key and a proof of inclusion against the STR, consisting of the sibling hashes along the path from the key’s position up to the root of the tree. Since interior nodes consist only of hashes of the left and right nodes, the client can verify that the key is at the position the server claims, or that the key is truly missing if the server claims it is.
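Verifying a proof of inclusion can be sketched with a plain binary Merkle tree. This simplifies the CONIKS design in several ways that should be read as assumptions: positions are list indices rather than name prefixes, leaves are raw byte strings rather than commitments, and the domain-separation tags `leaf:` and `node:` are invented for the example.

```python
import hashlib
from typing import List


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(leaves: List[bytes]) -> List[List[bytes]]:
    """All tree levels, hashed leaves first, root last (leaf count: power of two)."""
    level = [h(b"leaf:" + leaf) for leaf in leaves]
    levels = [level]
    while len(level) > 1:
        level = [h(b"node:" + level[i] + level[i + 1])
                 for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def prove(levels: List[List[bytes]], index: int) -> List[bytes]:
    """Sibling hashes from the leaf at index up to (not including) the root."""
    path = []
    for level in levels[:-1]:
        path.append(level[index ^ 1])  # sibling shares all but the last bit
        index //= 2
    return path


def verify(leaf: bytes, index: int, path: List[bytes], root: bytes) -> bool:
    """Recompute the root from the leaf and its sibling hashes."""
    node = h(b"leaf:" + leaf)
    for sibling in path:
        if index % 2 == 0:
            node = h(b"node:" + node + sibling)
        else:
            node = h(b"node:" + sibling + node)
        index //= 2
    return node == root


bindings = [b"alice:keyA", b"bob:keyB", b"carol:keyC", b"dave:keyD"]
levels = build_levels(bindings)
root = levels[-1][0]
bob_path = prove(levels, 1)
```

The client only needs the root it already trusts plus a logarithmic number of sibling hashes; a substituted key changes the recomputed root and the check fails.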

A general flow for secure communications therefore looks something like this:

- Alice contacts the service provider (Carol) and requests the public key for bob@host.com.

- Carol returns the public key and the proof of inclusion hash chain.

- Alice computes the Merkle root of the tree and compares it to her last known root from the STR chain.

- After proving Carol gave the correct key, Alice encrypts her message with Bob’s public key and sends it.

Auditing

One of the most important features of CONIKS is the ability for anyone to audit the name → key mappings. Indeed, clients are encouraged to regularly audit their own keys to ensure they have not been compromised. Auditing works much like key lookup: the server is consulted for the key mapping to the given name. Clients who are also auditing will confirm that the returned public key matches the saved one they possess, and that the keys have not changed in an unauthorized way between epochs.

Considerations for deploying CONIKS

Initial key submission window

It’s important to note that until the next STR is published, clients won’t be able to communicate using their public key, as nobody else will have seen it. However, clients can audit their own keys to prevent any malicious actors from changing their initial key upload.

‘Whistleblowing’

The CONIKS protocol currently doesn’t provide any way for clients to contact each other if they detect malicious activity either in general or on the part of the service provider. If their keys are hijacked, they are responsible for communicating that information to others on their own, which could be over an unsafe channel.

Key Change or Revocation

CONIKS doesn’t currently provide any way for users to update or revoke their keys directly. One easy path would be for users to sign some message indicating they wish to remove the previous key. However, if a user lost their key they would be unable to revoke their old one.

SSL Stripping

Defeating SSL Using sslstrip, 2009 Black Hat DC presentation by Moxie Marlinspike

In this part of the blog post we further explore the sslstrip attack, presented to the class by Team Sesame on February 10th, 2017.

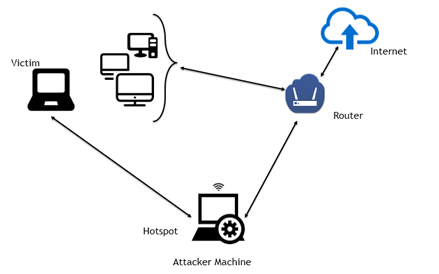

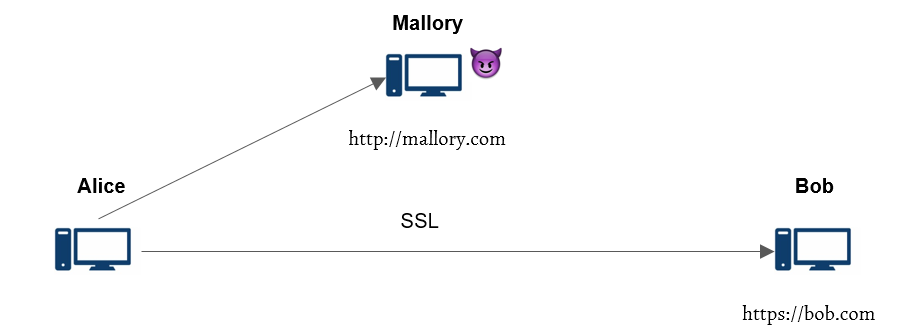

In-the-Middle Attack Setup (Image from avicoder)

Overview

The sslstrip attack is both an in-the-middle attack and protocol downgrade attack that relies on websites not implementing HSTS and also browsers’ inability to prevent users from POST’ing sensitive data to HTTP websites.

The sslstrip python module, when used in conjunction with an MITM framework, replies to the victim’s HTTPS requests with HTTP versions of the same page silently stripping the S. On modern browsers the only visual cue is the lack of HTTPS:

Screenshot of Chrome on iPhone 7

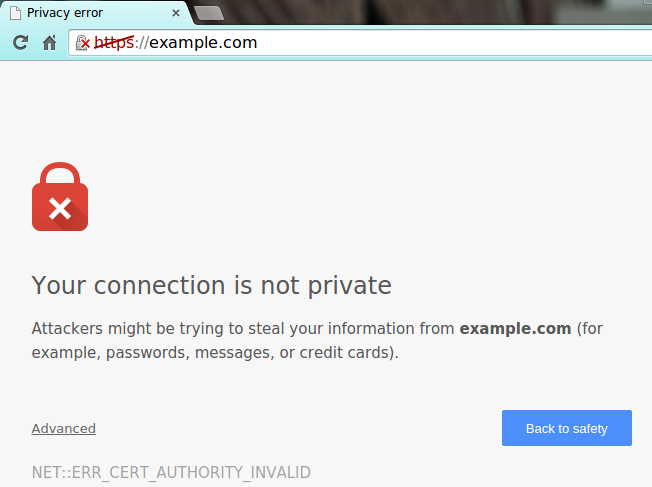

This differs from other HTTPS MITM attacks whereby an attacker forces the victim to connect to a fake access point where tools like mitmproxy can be then used to sign forged certificates for websites on the fly.

However, most browsers have mechanisms to protect against this like HTTP Public Key Pinning (HPKP) and browser warnings:

Self signed SSL certificate warning in Google Chrome, image courtesy of Inmotionhosting.

Necessary Requirements

In order for an attacker to obtain victim credentials for a given HTTPS website using sslstrip:

- The attacker must be on the same LAN as the victim (necessary to obtain MITM status)

- The HTTPS website the victim accesses must have initiated the connection first via HTTP

- The given website must not be on the victim’s browser’s HSTS preload list (supported by all modern browsers, this list includes the Google domains and ~7500 other sites)

Sslstrip works by listening for HTTP 301 “Moved Permanently” responses (i.e., redirects to HTTPS). So unless the victim explicitly types in https:// (HTTPS to begin with), or the website is on the browser’s HSTS preload list, or the website is HTTPS only, the 301 will be issued. At that point sslstrip will intercept this response and instead relay back to the victim an HTTP version of the HTTPS site.

So, Requirement 2 is necessary for sslstrip to work. If the website is on the browser’s HSTS preload list, the first request over HTTP is never dispatched; the browser internally redirects it to the HTTPS version, which is why Requirement 3 states that the given website must not be on the HSTS preload list.
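The browser-side decision behind Requirements 2 and 3 can be sketched as a small function. The preload entry, the function name, and the assumption that a bare host name defaults to http:// are all illustrative; actual browser behaviour is more involved.

```python
from urllib.parse import urlsplit, urlunsplit

HSTS_PRELOAD = {"accounts.example"}  # hypothetical preload list entries


def first_request_url(typed: str, preload=HSTS_PRELOAD) -> str:
    """URL the browser actually requests for what the user typed."""
    if "://" not in typed:
        typed = "http://" + typed  # assumed default when no scheme is typed
    parts = urlsplit(typed)
    if parts.scheme == "http" and parts.hostname in preload:
        # Preloaded host: upgrade internally, so no plain HTTP request
        # ever leaves the machine for an attacker to intercept.
        parts = parts._replace(scheme="https")
    return urlunsplit(parts)


preloaded = first_request_url("accounts.example/login")
exposed = first_request_url("bank.example")
explicit = first_request_url("https://bank.example/")
```

Only the second case sends a plain HTTP request, and that request (with its redirect response) is what sslstrip needs to see.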

Countermeasures

The most obvious countermeasures include:

- Browser Indications, SSLight, other addons?

- Server: HSTS (HTTP Strict Transport Security) Preloads

- Server: HTTPS only

While improvements have been made to sslstrip such as using different domains like wwww (and various countermeasures like key pinning and browser displays), in present day the original sslstrip (2009) does not work against sites with HSTS enabled like facebook.com and gmail.com. Surprisingly though, a 2016 article claimed only 1 in 20 HTTPS servers implemented HSTS correctly!

Sslstrip is an easily deployable and effective attack “in the wild” for several reasons, including session hijacking and the fact that most browsers do not alert users that they are submitting over HTTP (or conversely, most users do not notice http:// when submitting sensitive info). In addition, password reuse is widespread: credentials obtained from, say, apple.com or a bank could be tried against more sensitive websites which do support HSTS (enable two-factor authentication!).

Further Reading:

Writeup describing modern browser and website protections against MITM attacks:

https://www.troyhunt.com/understanding-http-strict-transport/

Try it out yourself! This blog post describes how an attacker on Mac OS X could use sslstrip to gather credentials over the network. I learned both my online banking website and apple.com were vulnerable to sslstrip (i.e. the domains of these sites were not on my browser’s HSTS preload list).

http://techjots.blogspot.com/2012/11/sslstrip-on-mac-os-x-mountain-lion.html

Last week we learned about Padding Oracle Attacks, which use side-channel information related to ciphertext message padding in order to deduce the underlying plaintext message in a TLS communication. This week we will learn about Downgrade Attacks, which force a TLS server to choose weaker encryption and protocol suites, thereby making it vulnerable to in-the-middle attacks.

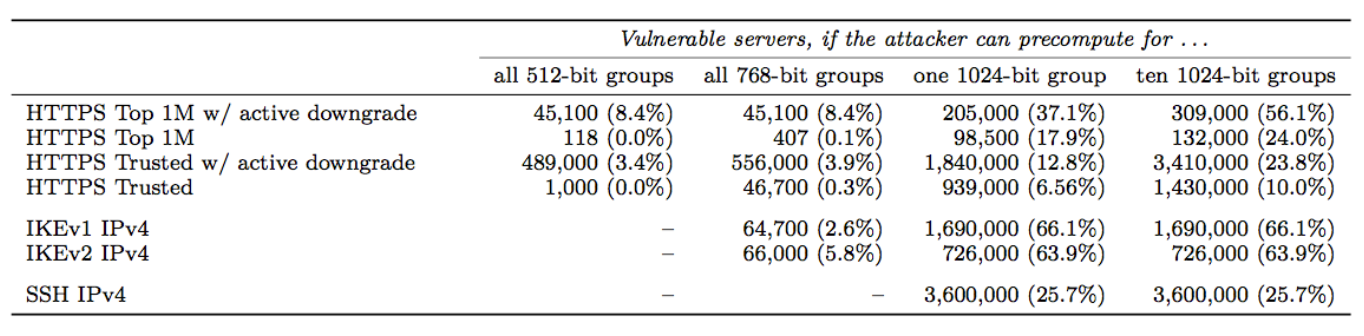

We begin with a brief introduction to the Diffie-Hellman key exchange protocol commonly used in TLS and then describe the Logjam attack on Diffie-Hellman, which relies on pre-computation of discrete logs for a 512-bit prime used in the protocol. Next we discuss State-level Threats to Diffie-Hellman, where we show that the pre-computation of discrete logs is within reach of current academic computation power. The practical consequence is that 8% of the top 1 million websites that use HTTPS can be broken in real time.

In the remainder of the article, we discuss the Bleichenbacher attack, which is a padding oracle attack on the PKCS#1 v1.5 padding used in SSLv2. We conclude with the DROWN attack, which uses the Bleichenbacher attack to gain access to the RSA key of a TLS communication.

Diffie-Hellman Cryptanalysis

Diffie-Hellman is a cryptologic method used to confidentially generate a shared secret (encryption key) between two parties in a conversation. Because the shared secret is used to encrypt message traffic, the integrity of Diffie-Hellman is crucial to the security of TLS, where the confidentiality of communication depends heavily on the process of securely generating a shared encryption key.

Background: Diffie-Hellman Key Exchange

The genius of Diffie-Hellman lies in its adherence to the principle of perfect forward secrecy. The asymmetric keys used to perform the key exchange can be deleted after the key exchange is completed, so there is no risk of a later compromise enabling an attacker to break previous traffic. The agreed shared key is never transmitted, saved, or otherwise made observable across a communication channel. Even if the adversary were to collect all encrypted data in transit and later recover all material stored at the endpoints, they would still not be able to break the encryption.

The mathematical underpinnings of Diffie-Hellman are relatively simple. See the following image for an illustration of the exchange that occurs when generating a shared secret. Note that the secret values, a and b, are never transmitted nor shared between Alice and Bob.
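The exchange can also be sketched in a few lines of Python. The parameters are assumptions chosen for readability: the modulus is the Mersenne prime \(2^{127}-1\), which is far too small for real use, and the base 3 is picked arbitrarily rather than as a vetted generator.

```python
import secrets

p = 2**127 - 1  # public prime modulus (illustrative size only)
g = 3           # public base (arbitrary choice for the sketch)

# Private values: chosen independently and never sent over the network.
a = secrets.randbelow(p - 2) + 1  # Alice's secret
b = secrets.randbelow(p - 2) + 1  # Bob's secret

# Public values: these are the only things exchanged.
A = pow(g, a, p)  # Alice -> Bob
B = pow(g, b, p)  # Bob -> Alice

# Each side combines its own secret with the other's public value.
alice_shared = pow(B, a, p)  # (g^b)^a mod p
bob_shared = pow(A, b, p)    # (g^a)^b mod p
```

Both sides arrive at \(g^{ab} \bmod p\), while an eavesdropper sees only `p`, `g`, `A`, and `B`.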

The Diffie-Hellman Problem

The Diffie-Hellman problem (DHP) is as follows: Given an element \(g\) and the values \(g^x, g^y\) what is the value of \(g^{xy}\)?

Clearly, if this problem were easy to solve, Diffie-Hellman would be useless, since any eavesdropping adversary could then collect both \(g^x\) and \(g^y\), compute \(g^{xy}\), and subsequently decrypt all of Alice and Bob’s communication. The most efficient known way to solve DHP is to solve the Discrete Logarithm Problem (DLP), which requires that the eavesdropping adversary compute \(x\) given \(g\) and \(g^x\) modulo a prime \(p\), and which is still believed to be a hard problem in general. But, if \(p\) is a weak prime (as explained below), it can be solved in a reasonable amount of time with available computing resources.

Adrian et al. published a paper in 2015 that demonstrated a weakness in Diffie-Hellman key exchange in the Handshake Protocol of TLS. In order to perform cryptanalysis in such a situation, an attacker must solve DLP by computing arbitrary discrete log values in real-time. While it is not known how to calculate discrete logs efficiently (and believed to be hard), the attack can be accelerated using precomputation.

Index Calculus

While the logic behind the precomputation cryptanalysis is based on the General Number Field Sieve, we examine Index Calculus, a simplified version of the same concept. Index Calculus involves two steps: sieving and linear algebra. In the sieving step, the cryptanalyst chooses a multiplicative group of numbers and attempts to find numbers that factor completely over this group. We can then express these numbers as linear combinations of logs of the multiplicative group elements. By finding many such numbers, we obtain equations in terms of logs of multiplicative group elements. In the linear algebra step, we can use these equations to solve for values of the logs. This allows us to generate a database of precomputed logs.

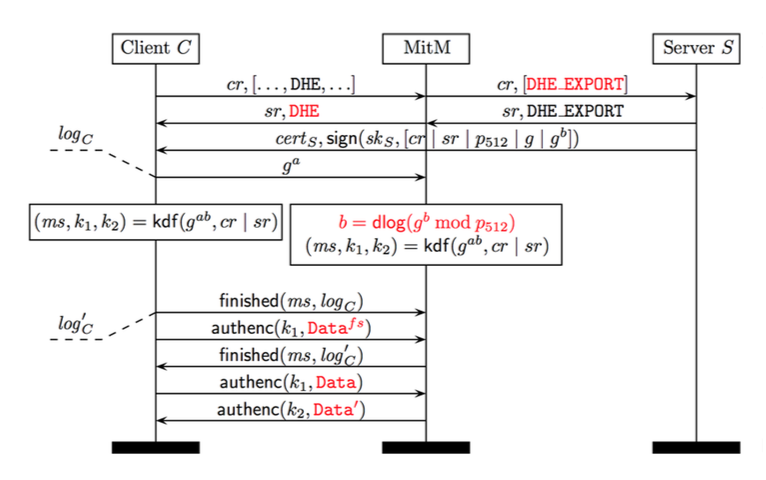
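
To make the sieving step a little more concrete, here is a small sketch that collects relations for a toy prime. The prime 1019, the generator 2, and the factor base {2, 3, 5, 7} are assumptions for illustration; the brute-force log table is there only to check that each relation really is a linear equation in the logs of the factor base, and it would be infeasible at real sizes.

```python
p, g = 1019, 2                  # toy prime and generator (assumptions)
factor_base = [2, 3, 5, 7]

def exponents_over_base(n):
    """Return the exponents of n over factor_base, or None if n is not smooth."""
    exps = []
    for q in factor_base:
        e = 0
        while n % q == 0:
            n //= q
            e += 1
        exps.append(e)
    return exps if n == 1 else None

# Sieving step: keep exponents k for which g^k mod p factors over the base.
relations = []
for k in range(10, p - 1):
    exps = exponents_over_base(pow(g, k, p))
    if exps is not None:
        relations.append((k, exps))
    if len(relations) == 6:
        break

# Sanity check only: each relation says
#   k = sum(e_i * log_g(q_i))  (mod p - 1)
log_table = {pow(g, k, p): k for k in range(p - 1)}
relations_hold = all(
    k % (p - 1) == sum(e * log_table[q] for q, e in zip(factor_base, exps)) % (p - 1)
    for k, exps in relations
)
```

The linear algebra step would solve these equations for the unknown logs instead of looking them up.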

Active Downgrade

Using precomputed discrete logs, an attacker can perform an active downgrade attack on a TLS connection. Operating as an in-the-middle attacker, the attacker intercepts a Client Hello message and alters it to instruct the server to use export-grade Diffie-Hellman, which uses 512-bit keys rather than 1024-bit or higher. This allows the attacker to use the precomputed logs. During the Handshake Protocol, the attacker is able to solve the discrete log problem and recover the master secret of that connection. This allows the attacker to communicate directly with the client over an encrypted channel, while the client still thinks it is communicating with its intended server.

The Logjam Attack: Step-by-Step

1. The Client sends a Client Hello message to the Server. This includes a list of all supported ciphersuites that can be used to generate the shared secret and requests that one be selected by the Server to begin the Diffie-Hellman exchange.

2. The adversary intercepts the Client Hello message and alters the available ciphersuites to only include 512-bit Diffie-Hellman, then forwards the altered Client Hello to the Server.

3. The Server receives the Client Hello. Since only one ciphersuite is shown to be supported by the Client, the Server agrees to 512-bit Diffie-Hellman and generates a 512-bit prime \(p\) to be used in the key exchange.

4. The adversary intercepts the ciphersuite confirmation message from the Server and alters it to reflect the client’s original ciphersuite preference, selecting full-strength DHE. It also eavesdrops on the Server’s Diffie-Hellman communication to discern \(g\) and \(g^b\).

5. The Client thinks it has received the proper Diffie-Hellman information from the Server and begins its half of the Diffie-Hellman exchange. Note that the 512-bit prime \(p\) is still considered a valid prime for 1024-bit DHE. Meanwhile, the adversary is hard at work calculating the discrete log of \(g^b \mod p_{512}\). Multiple factors can contribute to reduce the time-complexity of this process.

6. Once the adversary has determined the value of \(b\), the Client and adversary begin communicating as though the adversary is the Server.

The time-complexity of precomputation using the number field sieve is entirely dependent on the prime number used; as a result, any connections using the same prime can be quickly broken. The researchers behind Logjam detected that millions of HTTPS, SSH, and VPN servers use the same prime numbers for Diffie-Hellman key exchange, rendering supposedly-secure communications vulnerable to Logjam.
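
The "precompute once per prime, reuse for every connection" idea can be sketched with a much simpler algorithm than the number field sieve. The example below swaps in baby-step giant-step purely for illustration; the prime 1,000,003, the base 2, and the function names are assumptions.

```python
from math import isqrt

def precompute_baby_steps(g, p):
    """One-time work for a fixed group (g, p): table of g^j for j < m."""
    m = isqrt(p - 1) + 1
    table = {}
    x = 1
    for j in range(m):
        table.setdefault(x, j)
        x = x * g % p
    return m, table

def discrete_log(h, g, p, m, table):
    """Per-connection work: find x with g^x = h (mod p) using the table."""
    step = pow(g, -m, p)              # g^(-m) mod p (Python 3.8+)
    y = h
    for i in range(m):
        if y in table:
            return i * m + table[y]
        y = y * step % p
    return None

p, g = 1_000_003, 2                   # toy shared prime (assumption)
m, table = precompute_baby_steps(g, p)     # done once per prime

# Two unrelated "connections" that reuse the same prime are both
# broken with the same precomputed table.
secrets_used = [123_456, 654_321]
recovered = [discrete_log(pow(g, b, p), g, p, m, table) for b in secrets_used]
```

The recovered exponents are equivalent to the originals in the sense that they produce the same public values, which is all an attacker needs.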

Weak Primes

Consider a prime number \(p\). In 1978, Pohlig and Hellman demonstrated that if all factors of \(p-1\) are less than \(\log^c p\), the problem of solving the discrete logarithm \(\mod p\) is in \(P\). In general terms, this means an attacker can greatly reduce the complexity of the discrete logarithm problem and thus conduct the Logjam attack in a shorter period of time. We’ll demonstrate with an example using a small prime.

Let \(p = 31\) and \(g = 3\). \(g\) has order 30 → \(g^{30} = 1 \mod p\)

The factors of \(30 = 2 * 3 * 5\) are too small for the prime \(p\) to be secure, allowing an attacker to convert the discrete logarithm problem \(\mod 31\) into problems \(\mod 2, 3, 5\). With the help of the Chinese Remainder Theorem, an attacker can easily find \(x\) given \(g\) and \(g^x\).
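
The reduction for this small example can be sketched as follows. This is a minimal illustration for \(p = 31\), \(g = 3\) only; the helper names are hypothetical, and a general implementation would also need to handle repeated prime factors of \(p-1\).

```python
p, g = 31, 3
order = p - 1                    # 30 = 2 * 3 * 5
prime_factors = [2, 3, 5]

def log_in_subgroup(h, q):
    """Find x mod q by projecting into the subgroup of order q and brute forcing."""
    g_q = pow(g, order // q, p)  # element of order q
    h_q = pow(h, order // q, p)
    for x in range(q):
        if pow(g_q, x, p) == h_q:
            return x

def crt(residues, moduli):
    """Combine x = r_i (mod m_i) for pairwise coprime moduli."""
    x, M = 0, 1
    for r, m in zip(residues, moduli):
        # Choose t so that x + M*t = r (mod m).
        t = (r - x) * pow(M, -1, m) % m
        x, M = x + M * t, M * m
    return x

def pohlig_hellman(h):
    residues = [log_in_subgroup(h, q) for q in prime_factors]
    return crt(residues, prime_factors)

secret = 17
h = pow(g, secret, p)            # the public value g^x mod p
recovered = pohlig_hellman(h)
```

Each subgroup search costs at most 5 steps here, instead of up to 30 for a direct search over the whole group.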

A strong prime \(p = 2q + 1\) (\(q\) is prime) avoids this problem of reducibility by ensuring that \((p-1)\div 2\) cannot be composite (and, by extension, that Pohlig-Hellman cannot obtain information from \(p\)).

This puts a server that supports export-grade DH and reuses weak primes at severe risk from a motivated attacker.

State-Level Threats to Diffie-Hellman

Current Situation

In recent years, the general bar for internet security has risen substantially. HTTPS is now the norm for most heavily trafficked websites and is spreading through services such as Let’s Encrypt, which allow domain owners to easily get SSL certificates for a website at no cost. In general, this trend looks positive: for key exchange protocols, stronger 768-bit and 1024-bit groups are now the norm rather than the exception. Individual users and institutions have also begun to better understand the need for security, and IPsec Virtual Private Networks (VPNs) and SSH connections are becoming more widely used.

Academic Power

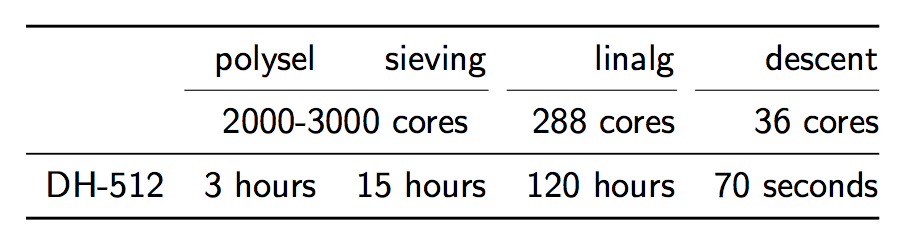

While standards have advanced and security has increased in general, cryptanalysis techniques and computational power have also increased. Although ideas such as Moore’s Law (that computing power at a certain price level effectively doubles every 18 months) are well known, their practical implications are not as often taken into account. These days, DH-512 is easily within the reach of “academic power,” that is to say, within the reach of institutions with access to midsize computing facilities or individuals who can afford a few hundred thousand dollars of computing.

With recent hardware, “cracking” DH-512 takes 2.5 core-years in the sieving phase, 7.7 core-years in the linear algebra phase, but only 10 core-minutes in the descent phase. So, given a 2000-3000 core cluster, all of the phases combined except the descent phase take about 140 hours.

https://weakdh.org/weakdh-ccs-slides.pdf

However, the descent phase only takes about 70 seconds. What does this mean? After 1 week of pre-computation, an individual calculation can be completed in about 70 seconds. If this is combined with a “downgrade attack” (described above), connections to about 8% of the top 1 million sites that use HTTPS can be broken in real time.

Sidebar: What’s a Core Year?

A “core-year” is a measure of the amount of work that an average computer core can complete in a year. To give a point of reference in terms of concrete cost, a single-core Amazon EC2 instance costs about $0.02 per hour. To run that core for a year would cost about $0.02 * (24 * 365) = $175. A core-day and a core-minute are defined similarly.

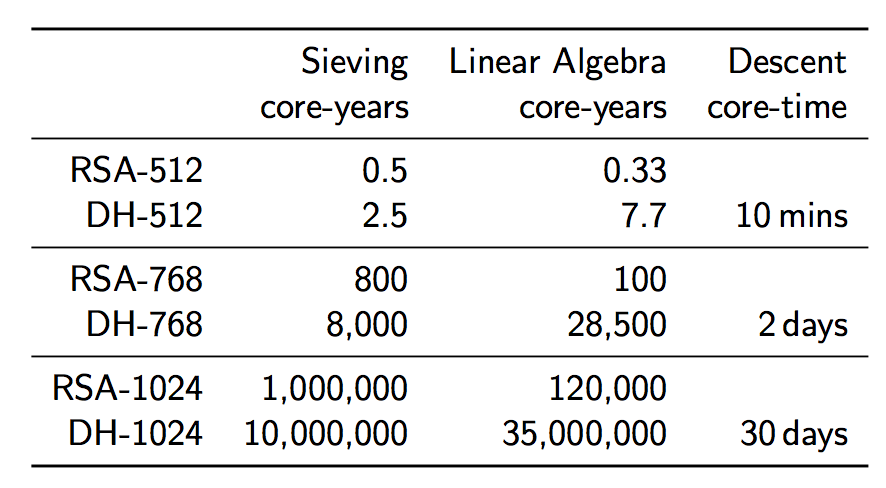

Although this may not seem like a serious problem, since current practice is usually to use DH-768 or DH-1024 (for example, 91.0% of IKEv2 servers support a 1024-bit connection), in actuality even those are vulnerable to attack. In 2009, a new integer factorization record was set when a 768-bit integer was factored with academic resources over the span of 2 years. This would imply that breaking DH-768 takes about 36,500 core-years in pre-computation and 2 core-days in descent. As large as this sounds, it is only a few million dollars’ worth of computation, which is well within reach of both moderately-funded academics and moderately-motivated adversaries (and peanuts for an organization like the NSA).
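
The "few million dollars" figure follows from the sidebar's arithmetic. Here is a back-of-the-envelope sketch, assuming the $0.02 per core-hour rate quoted in the sidebar (actual cloud pricing varies) and using hypothetical names:

```python
HOURLY_RATE_USD = 0.02            # single-core price quoted in the sidebar
HOURS_PER_YEAR = 24 * 365

def core_years_to_usd(core_years, hourly_rate=HOURLY_RATE_USD):
    """Convert an amount of work in core-years into an approximate dollar cost."""
    return core_years * HOURS_PER_YEAR * hourly_rate

one_core_year = core_years_to_usd(1)            # roughly $175
dh768_precomputation = core_years_to_usd(36_500)  # roughly $6.4 million
```

This ignores storage, networking, and engineering effort, so it is a lower bound rather than a budget.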

Structural Costs

Ok, so a DH-768 connection can probably be broken by a non-state-level actor, but what about a DH-1024 connection? Surely that is ok? Let’s take a look at the costliness of DH-1024 in comparison to 768-bit DH. The algorithmic time complexity increases by a factor of about 1220, the space complexity by a factor of 95, leaving us with this:

https://weakdh.org/weakdh-ccs-slides.pdf

Although the costs seem astronomically high, they are actually within the reach of a state-level actor. If we assume that some special purpose ASICs are developed to help speed up the sieving pre-computation such that it can be completed in one year (this would cost around $8 million), and we get access to, say, a Titan supercomputer for one year (at a cost of $122 million) to complete the linear algebra phase in one year, we find that we can complete all of our pre-computation for the small cost of $130 million. Compare this cost to the budget of a state-level actor such as the NSA: their 2012 budget was $10.5 billion, making this computation just over 1% of that budget.

What’s so Good About Breaking One Group?

But, you might object, what is the value in doing this pre-computation and breaking one group? Doesn’t this just mean that the NSA can only break a couple connections per year? Unfortunately, no. As Edward Snowden said, “If performing number field sieve pre-computations for at least a small number of 1024-bit Diffie-Hellman groups is possible, breaking any key exchanges made with those groups in close to real time is no difficulty.” This is because once the pre-computations are completed for a single group, that work can then be used to crack numerous connections.

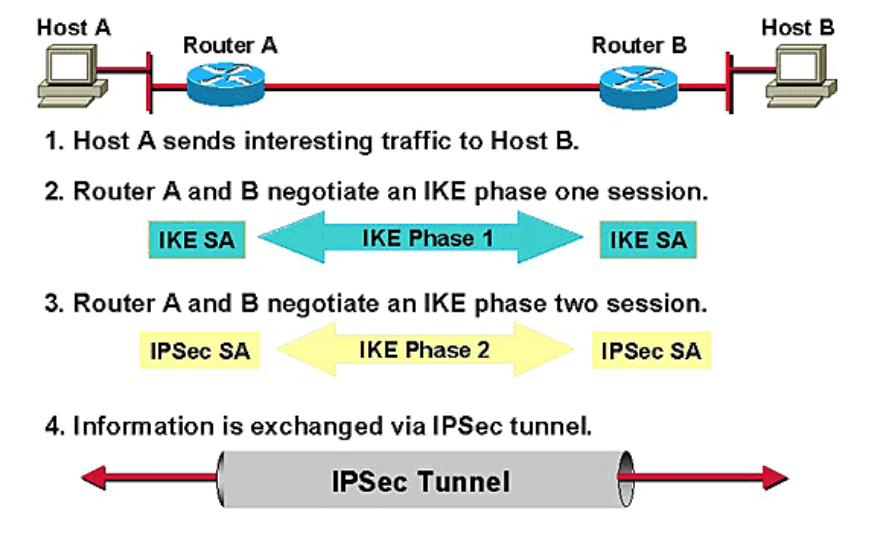

IKE (IPsec VPNs)

Let’s now take a step back and look at IKE, the Internet Key Exchange, which perhaps is the most vulnerable to these kinds of attacks. IKE is a protocol used within IPsec to create a “Security Association (SA),” which is just a set of shared security attributes between two network parties, such as the cryptographic algorithm being used, the algorithm mode, credentials, etc. In order to establish the SA, two parties go through a process like this:

https://weakdh.org/weakdh-ccs-slides.pdf

While the exact details of how the protocol works are not important, it is important to note that to perform IKE passive decryption, an adversary would have to have access to a known pre-shared key, both sides of the IKE handshake, and both the handshake traffic and ESP traffic.

Also important to note is that the vast majority of IKE systems use one particular 1024-bit DH group, the Oakley Group 2, for the protocol. We find that 86.1% of IKEv1 servers and 91.0% of IKEv2 servers support Oakley Group 2, and 66.1% of IKEv1 servers and 63.9% of IKEv2 servers chose Oakley Group 2 for the protocol. This means that a state-level actor with access to the pre-shared key, both sides of the IKE handshake, and both the handshake traffic and ESP traffic would be able to passively decrypt 66% of VPN server traffic…in near real-time.

Show me the Adversary

Is this all hypothetical? Does any such adversary actually exist?

A 2012 Wired article revealed that the NSA, several years before 2012, made an “enormous breakthrough” in its ability to cryptanalyze current public encryption. While the exact details are not known, it may be reasonable to assume that the NSA completed the pre-computation for a 1024-bit DH group, such as the Oakley Group 2, allowing it to passively decrypt a swath of internet traffic.

https://weakdh.org/weakdh-ccs-slides.pdf

The impact of such a break to IKE has already been noted. Other protocols would also be vulnerable to the break. SSH, for example, supports Oakley Group 2, Oakley Group 14, or a server-defined group that is negotiated through a DH-GEX handshake. According to recent data, 21.8% of servers prefer Oakley Group 2, and 37.4% prefer the server-defined group. However, of that 37.4%, almost all of them just provided Oakley Group 2 rather than a real custom group. Thus, a state-level attacker that performed the break could passively eavesdrop on connections to 25.7% of all publicly accessible SSH servers.

Unfortunately, HTTPS connections are similarly affected. Of the top 1 million sites that support DHE, 84% use a 1024-bit or smaller group, and 94% of those use one of five common groups. Thus, 17.9% of connections to the top 1 million sites could be passively eavesdropped with the pre-computation for a single 1024-bit prime.

Mitigations

Is there any hope that a connection can really be secure given this information? Luckily, some mitigations can be put into place. First, servers can move to using elliptic curve cryptography (ECC). A transition to an elliptic curve Diffie-Hellman key exchange (ECDH) with appropriate parameters would thwart all known feasible cryptanalytic attacks, as ECC discrete log algorithms don’t gain much advantage from precomputation. When ECC is not an option, it is recommended that primes at least 2048 bits in length be used. It would be ideal if browser vendors and clients raised the minimum accepted size for DH groups to at least 1024 bits. If large primes are not supported, then always use a fresh 1024-bit group to reduce the efficacy of precomputation-based attacks. It is parameter reuse that allows state-level attackers to easily perform wide-scale passive decryption.

Bleichenbacher’s Padding Oracle Attack

Bleichenbacher’s padding oracle attack is an adaptive chosen ciphertext attack against PKCS#1 v1.5, the RSA padding standard used in SSL and TLS. It enables decryption of RSA ciphertexts if a server distinguishes between correctly and incorrectly padded RSA plaintexts, and was termed the “million-message attack” upon its introduction in 1998, after the number of decryption queries needed to deduce a plaintext. All widely used SSL/TLS servers include countermeasures against Bleichenbacher attacks.

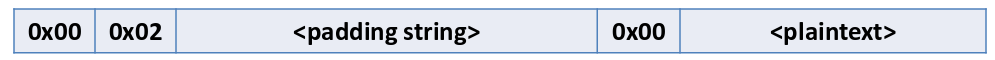

PKCS#1 v1.5 encryption padding

Bleichenbacher’s padding oracle attack relies on the structure of RSA PKCS#1 v1.5 padding. Although RSA PKCS#1 v2.0 implements OAEP, SSL/TLS still uses PKCS#1 v1.5. The PKCS#1 v1.5 encryption padding scheme randomizes encryptions by prepending a random padding string PS to a message k (here, a symmetric session key) before RSA encryption:

1. The plaintext message is k, \(l_k = |k|\). The encrypter generates a random byte string PS, where |PS| ≥ 8, \(|PS| = l_m − 3 − l_k \) and 0x00 \( \notin \{PS[1],…,PS[|PS|]\}\).

2. The encryption block is \(m =\) 00||02||\(PS\)||00||\(k\).

3. The ciphertext is computed as \(c = m^e\mod{N}\).

To decrypt such a ciphertext, the decrypter first computes \(m = c^d \mod{N}\). Then it checks whether the decrypted message m is correctly formatted as a PKCS#1 v1.5-encoded message. We say that the ciphertext c and the decrypted message bytes \(m[1]||m[2]||…||m[l_m]\) are PKCS#1 v1.5 conformant if:

\( \qquad m[1] || m[2] = \) 0x00||0x02 and 0x00 \(\notin \{m[3],…,m[10]\} \)

If this condition holds, the decrypter searches for the first value i > 10 such that \(m[i] =\) 0x00. Then, it extracts \(k = m[i+1]||…||m[l_m]\). Otherwise, the ciphertext is rejected.
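
The encoding and the conformance check can be sketched in a few lines. This is an illustrative sketch rather than any library's implementation: the function names are hypothetical, `l_m` is taken to be the length of the RSA modulus in bytes, and the RSA operation itself is left out.

```python
import secrets

def pkcs1_v15_pad(k: bytes, l_m: int) -> bytes:
    """Build 00 || 02 || PS || 00 || k, where PS contains no zero bytes."""
    ps_len = l_m - 3 - len(k)
    if ps_len < 8:
        raise ValueError("message too long for this modulus size")
    ps = bytes(secrets.randbelow(255) + 1 for _ in range(ps_len))
    return b"\x00\x02" + ps + b"\x00" + k

def pkcs1_v15_unpad(m: bytes) -> bytes:
    """Return k if m is conformant, otherwise raise ValueError."""
    # The text indexes bytes from 1; Python indexes from 0.
    if m[0:2] != b"\x00\x02" or 0 in m[2:10]:
        raise ValueError("not PKCS#1 v1.5 conformant")
    i = m.index(0, 10)        # first zero byte after the 8 mandatory PS bytes
    return m[i + 1:]
```

Note that the `ValueError` raised here is exactly the kind of observable failure that a padding oracle attack feeds on if it is exposed to the sender.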

In SSLv3 and TLS, RSA PKCS#1 v1.5 is used to encapsulate the premaster secret exchanged during the handshake. Thus, k is interpreted as the premaster secret. In SSLv2, RSA PKCS#1 v1.5 is used for encapsulation of an equivalent key denoted the master_key.

Bleichenbacher attack

Bleichenbacher’s attack is a padding oracle attack; it exploits the fact that RSA ciphertexts should decrypt to PKCS#1 v1.5-compliant plaintexts. If an implementation receives an RSA ciphertext that decrypts to an invalid PKCS#1 v1.5 plaintext, it might naturally leak this information via an error message, by closing the connection, or by taking longer to process the error condition. This behavior can leak information about the plaintext that can be modeled as a cryptographic oracle for the decryption process. Bleichenbacher demonstrated how such an oracle could be exploited to decrypt RSA ciphertexts.

Algorithm

In the simplest attack scenario, the attacker has a valid PKCS#1 v1.5 ciphertext \(c_0\) that they wish to decrypt to discover the message \(m_0\). They have no access to the private RSA key, but instead have access to an oracle, \(\delta\), that will decrypt a ciphertext c and inform the attacker whether the most significant two bytes match the required value for a correct PKCS#1 v1.5 padding:

$$ \delta (c) = \begin{cases} 1 & \textrm{if } c^d \mod{N} \textrm{ starts with 0x00 02} \\ 0 & \textrm{otherwise}. \end{cases} $$

The attacker can take advantage of RSA malleability to generate new candidate ciphertexts for any s:

$$c = (c_0 \cdot s^e)\mod{N} = (m_0 \cdot s)^e \mod{N}$$

The attacker queries the oracle with c. If the oracle responds with 0, the attacker increments s and repeats the previous step. Otherwise, the attacker learns that for some r, \(2B ≤ m_{0}s−rN < 3B\), where \(B = 2^{8(l_m−2)}\), so that every plaintext starting with 0x00 02 lies in the interval \([2B, 3B)\). This allows the attacker to reduce the range of possible solutions to:

$$ \frac{2B+rN}{s} ≤ m_0 < \frac{3B+rN}{s} $$

The attacker proceeds by refining guesses for s and r values and successively decreasing the size of the interval containing \(m_0\). At some point the interval will contain a single valid value, \(m_0\). Bleichenbacher’s original paper describes this process in further detail.
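
To show how the interval narrowing plays out, here is a compact sketch of the attack against a toy oracle. Everything about the setup is an assumption for illustration: the RSA key is built from two small Mersenne primes so the search finishes quickly, the oracle checks only the two leading bytes, and the function names are hypothetical. The three search cases follow the steps in Bleichenbacher's paper.

```python
import secrets

# Toy RSA key from two Mersenne primes (assumption; far too small for real use).
P, Q, E = 2**31 - 1, 2**61 - 1, 65537
N = P * Q
D = pow(E, -1, (P - 1) * (Q - 1))
L_M = (N.bit_length() + 7) // 8        # modulus length in bytes
B = 1 << (8 * (L_M - 2))               # m starts with 00 02  <=>  2B <= m < 3B
queries = 0

def oracle(c):
    """delta(c): True if c^d mod N starts with 0x00 0x02."""
    global queries
    queries += 1
    return 2 * B <= pow(c, D, N) < 3 * B

def ceil_div(a, b):
    return -(-a // b)

def find_s(c0, start, stop=None):
    """Smallest s >= start (and < stop, if given) that the oracle accepts."""
    s = start
    while stop is None or s < stop:
        if oracle(c0 * pow(s, E, N) % N):
            return s
        s += 1
    return None

def narrow(intervals, s):
    """Intersect each [a, b] with the ranges implied by 2B <= m0*s - r*N < 3B."""
    out = set()
    for a, b in intervals:
        for r in range(ceil_div(a * s - 3 * B + 1, N), (b * s - 2 * B) // N + 1):
            lo = max(a, ceil_div(2 * B + r * N, s))
            hi = min(b, (3 * B - 1 + r * N) // s)
            if lo <= hi:
                out.add((lo, hi))
    return sorted(out)

def bleichenbacher(c0):
    intervals = [(2 * B, 3 * B - 1)]
    s = find_s(c0, ceil_div(N, 3 * B))             # first conforming s
    while True:
        intervals = narrow(intervals, s)
        if len(intervals) == 1 and intervals[0][0] == intervals[0][1]:
            return intervals[0][0]
        if len(intervals) > 1:                     # several candidates left
            s = find_s(c0, s + 1)
            continue
        a, b = intervals[0]                        # one interval: pick s cleverly
        r = ceil_div(2 * (b * s - 2 * B), N)
        while True:
            found = find_s(c0, ceil_div(2 * B + r * N, b), ceil_div(3 * B + r * N, a))
            if found is not None:
                s = found
                break
            r += 1

# Victim encrypts a one-byte "key" under PKCS#1 v1.5 padding.
ps = bytes(secrets.randbelow(255) + 1 for _ in range(L_M - 4))
m0 = int.from_bytes(b"\x00\x02" + ps + b"\x00" + b"K", "big")
c0 = pow(m0, E, N)
recovered = bleichenbacher(c0)
```

After the run, `queries` holds the number of oracle calls that were needed; it varies from run to run because the padding is random.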

It is worth noting that the author implemented a proof-of-concept of this attack on a custom padding oracle implementation. Over a test set of various 512-bit and 1024-bit keys, between 300,000 and 2 million ciphertexts were required to find the message. While the details of the custom oracle are not known, it is reasonable to assume that this attack is feasible in more realistic scenarios.

Countermeasures

In order to protect against this attack, the receiver must not leak information about the PKCS#1 v1.5 validity of the ciphertext. If the ciphertext does not decrypt to a valid message, the decrypter generates a fake plaintext and continues the protocol with this decoy. The attacker should not be able to distinguish the resulting computation from a correctly decrypted ciphertext. In the case of SSL/TLS, the server generates a random premaster secret to continue the handshake if the decrypted ciphertext is invalid. The client will not possess the session key to send a valid ClientFinished message and the connection will terminate.
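
The decoy idea can be sketched roughly as follows. The function names are hypothetical and the RSA decryption is assumed to have already happened; this sketch only shows the control flow, and it is not constant-time, which a real implementation would also need to be.

```python
import secrets

PREMASTER_LEN = 48   # length of the TLS premaster secret in bytes

def unpad_or_none(m: bytes):
    """Return the payload of a PKCS#1 v1.5 block, or None if it is malformed."""
    if len(m) < 11 or m[0:2] != b"\x00\x02" or 0 in m[2:10]:
        return None
    try:
        i = m.index(0, 10)
    except ValueError:
        return None
    return m[i + 1:]

def recover_premaster(padded_plaintext: bytes) -> bytes:
    """Never report a padding failure: fall back to a random premaster secret."""
    decoy = secrets.token_bytes(PREMASTER_LEN)   # generated unconditionally
    k = unpad_or_none(padded_plaintext)
    if k is None or len(k) != PREMASTER_LEN:
        return decoy
    return k
```

Either way the caller gets 48 bytes and carries on with the handshake, so the failure only surfaces later when the Finished messages do not verify.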

In addition, newer versions of PKCS#1 describe a new padding type, called OAEP, which uses a hash function to add more internal redundancy. This greatly decreases the probability that random strings will result in valid padding, effectively preventing the attack.

Sources of Further Reading

Cryptopals Crypto Challenge: A Do-It-Yourself exercise on the Bleichenbacher attack.

Efficient padding oracle attacks on cryptographic hardware

DROWN: Breaking TLS using SSLv2

The DROWN attack is inspired by Bleichenbacher’s padding oracle attack on SSLv2, which could decrypt an SSLv2 RSA ciphertext. The attack was possible due to a flaw in the SSLv2 protocol that revealed whether the decrypted message was conformant with PKCS#1 v1.5 padding, thus acting as a padding oracle. In this padding scheme, the first two bytes are fixed to 0x00 0x02, followed by at least 8 bytes of random padding string and then a 0x00 byte. The remaining part of the message is the plaintext, which may contain the key to be recovered. The padding scheme is shown below:

PKCS#1 v1.5 Padding Scheme

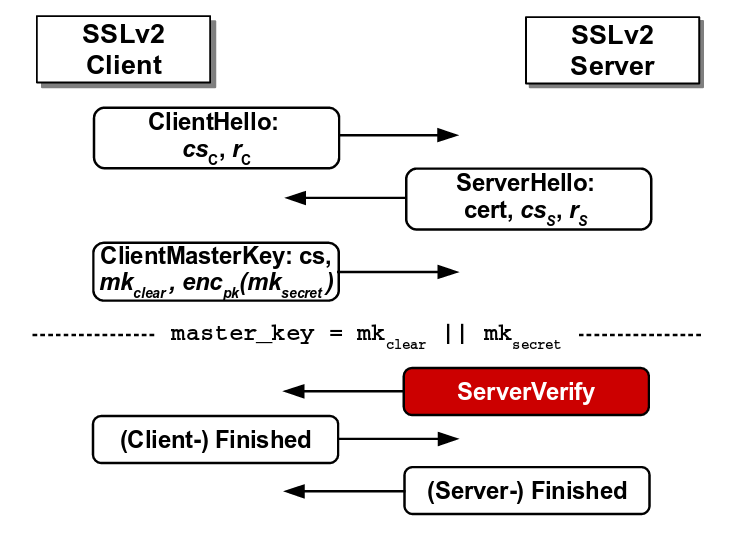

The client in the SSLv2 protocol sends a ClientMasterKey message to the SSLv2 server, which the server decrypts. The server then responds with a ServerVerify message, which reveals whether the ClientMasterKey message was conformant with the padding scheme.

The figure below depicts the SSLv2 protocol. The attacker can modify the original ClientMasterKey message, and if the SSLv2 server confirms the padding, the attacker immediately learns that the first two bytes of the modified message are ‘0x00 0x02’. This way, the attacker can repeatedly modify the original message and query the oracle. After multiple successful guesses for the modified message, the attacker can narrow down the guesses for the original message and recover the master_key.

Flaw in SSLv2 protocol where the server reveals the correctness of padding

Source: https://tlseminar.github.io/docs/drown.pdf

Moreover, SSLv2 allowed export-grade ciphersuites that supported only 40-bit keys. A Bleichenbacher attacker could brute-force the key by repeatedly querying the SSLv2 server.

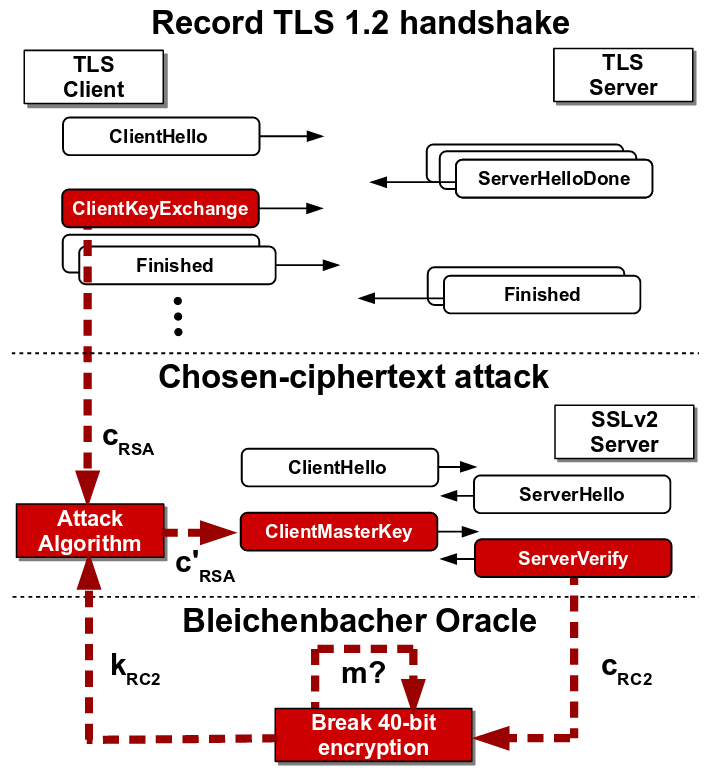

TLS patched the above flaws and (most) servers made the SSLv2 protocol obsolete. However, it was not uncommon for TLS servers to share the same RSA keys with SSLv2 servers. This made the TLS servers vulnerable to a modified form of the Bleichenbacher attack, which uses an SSLv2 server as a padding oracle to decrypt ciphertexts encrypted under the shared RSA key. DROWN instantiated this protocol-level attack and decrypted a TLS 1.2 handshake using 2048-bit RSA in 8 hours at a cost of $440 on Amazon EC2. As if this wasn’t devastating enough, the authors of DROWN pointed out some implementation bugs in OpenSSL which led to another attack called Special DROWN that could decrypt a TLS ciphertext in under 1 minute using a single CPU. Both attacks are described below.

DROWN Attack

The DROWN attack requires that a TLS server and an SSLv2 server share an RSA key. The attacker records multiple TLS handshake messages between a client and the TLS server. The aim of the attacker is to decrypt the RSA-encrypted premaster secret of a TLS handshake. To do so, the attacker connects to an SSLv2 server that has the same RSA key and uses it as the oracle in a Bleichenbacher attack to recover that secret.

Now the main hurdle for the attacker is that the format of the TLS handshake message may not comply with the PKCS#1 v1.5 padding scheme as used in SSLv2. The attacker converts the TLS ciphertext to an SSLv2 ciphertext using the concept of trimmers introduced by Bardou et al., which reduces the size of the TLS message. The use of trimmers requires repeated querying of the SSLv2 server while shifting the message bytes. The recovered SSLv2 plaintext is then converted back to the TLS plaintext, which reveals the premaster secret of the TLS handshake.

SSLv2-based Bleichenbacher attack on TLS

Source: https://tlseminar.github.io/docs/drown.pdf

Special DROWN Attack

The OpenSSL implementation had two bugs which led to a more efficient Bleichenbacher attack on an OpenSSL implementation of SSLv2 server.

OpenSSL extra clear oracle: The OpenSSL implementation allowed non-export cipher messages to contain clear_key_data, which led to potential overwriting of key bytes with null bytes. An attacker could vary the number of null bytes to decrypt the whole key one byte at a time.

OpenSSL leaky export oracle: OpenSSL in export cipher mode allowed valid oracle response for correctly padded message of ‘any’ length.

These bugs remained in OpenSSL implementation from 1998 up until its patch in 2015, when the authors of DROWN contacted the OpenSSL developers.

Prevention of DROWN

The attack is successful mainly because of the reliance on obsolete cryptographic practices. Export-grade ciphers only support 40-bit keys, which are vulnerable to brute-force attack, and hence it is crucial to disable export-grade ciphers and use safer ciphers (like AES_GCM) with longer key lengths (256 bits). PKCS#1 v1.5 padding leaks significant byte patterns, and hence a better padding technique should be used. The SSLv2 protocol includes the above obsolete cryptographic primitives, and hence it should be scrapped and replaced with TLS 1.3. Lastly, RSA public keys should not be shared among multiple servers or connections, in order to deter the attack.

Introduction

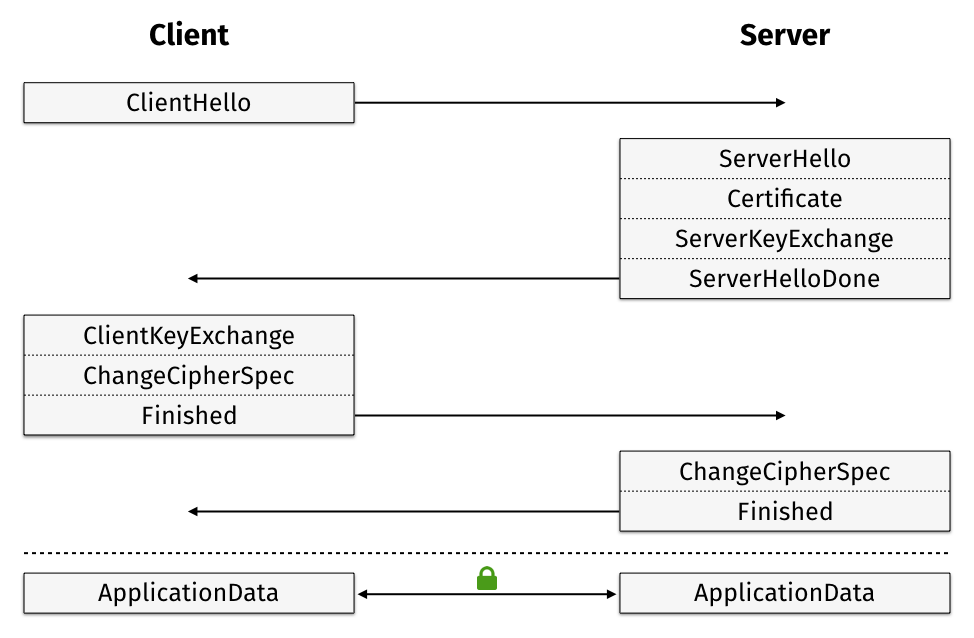

Last week, we examined how the Transport Layer Security (TLS) protocol provides a private channel between two devices by following the handshake and record layer protocols. The handshake layer establishes a symmetric key that both the client and the server can use in the record layer to encrypt and decrypt messages.

This week, we’ll discuss a real-world TLS attack, the Padding Oracle Attack, that takes advantage of our need for each message to be a particular set length. If the original message is not long enough, then we have to add padding for the CBC Mode Encryption to work. Because the padding is present, an attacker can chip away information on the ciphertext, one byte at a time, through analyzing the receiver’s error messages for the sender, response time, and general behavior.

We’ll start out by learning about how CBC Mode Encryption works.

Padding and CBC Mode

Analysis of the SSL 3.0 Protocol by David Wagner and Bruce Schneier (1997)

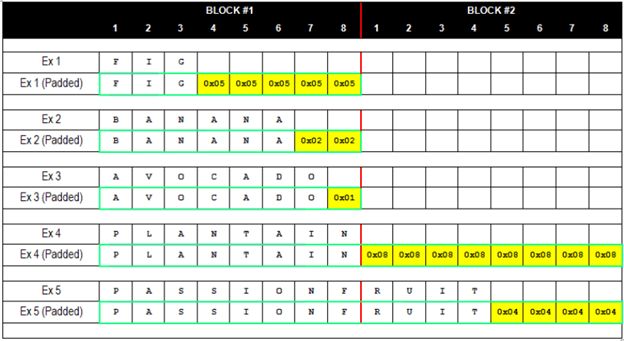

When AES-128 encryption is performed in cipher block chaining mode (CBC mode), the plaintext message is first split up into 16-byte (128-bit) blocks. It is often the case, however, that the length of the message is not perfectly divisible by 16. To account for varying message sizes, extra bytes, called padding, are concatenated after the end of the message to fill up the remaining quota for the final block.

These bytes are not chosen at random, however, and different cipher modes prescribe different padding methods. In order to clearly mark for the recipient where the message ends and the padding begins, the padding follows a strict formatting pattern. With PKCS #5 padding as used in TLS, if there are n bytes of padding, then each padding byte contains the value n. For instance, if the last block contains 15 message bytes, the 1-byte padding contains 0x01; if the last block contains 14 message bytes, the 2-byte padding contains 0x0202; 3-byte padding contains 0x030303; and so on.

Source: Gotham Digital Science
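
The padding rule described above is short enough to write out. Here is a minimal sketch for 16-byte blocks, with hypothetical function names:

```python
BLOCK_SIZE = 16

def pad(message: bytes, block_size: int = BLOCK_SIZE) -> bytes:
    """Append n bytes, each with value n, to reach a multiple of block_size."""
    n = block_size - len(message) % block_size   # 1..block_size
    return message + bytes([n]) * n

def unpad(padded: bytes, block_size: int = BLOCK_SIZE) -> bytes:
    """Strip the padding, raising ValueError if it is malformed."""
    n = padded[-1] if padded else 0
    if not 1 <= n <= block_size or padded[-n:] != bytes([n]) * n:
        raise ValueError("invalid padding")
    return padded[:-n]
```

A message that already fills its last block gets a whole extra block of padding, so the receiver can always tell where the message ends.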

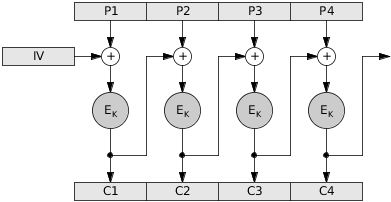

In CBC mode, the bytes of each plaintext block n are first XOR’ed with the encrypted bytes of the block before it, and their result is then encrypted. Block 1 is the obvious exception, which is XOR’ed with a fixed, random, or secret initialization vector (IV). Thus, for any block n > 1, where \(E_k\) is the encryption function, \(c_n\) is the encrypted block n, \(p_n\) is the plaintext block n, and \(c_{n-1}\) is the encrypted block n-1:

$$ c_n = E_k(p_n \oplus c_{n-1}) $$

Source: Alan Kaminsky

However, the predictability of the padding format can turn out to be an extremely exploitable weakness for an active attacker. Under SSL/TLS protocol, when servers receive an encrypted message, their first step upon decryption is to check the validity of the padding; that is, determine if the numbers at the end of the last block represent the number of padding bytes. As soon as this step is completed, the server will return to the sender either an error code or an acknowledgment that the padding is valid. In this way, the server acts as an oracle to an active attacker, providing them with confirmations/rejections to inform their guesses.

Padding Oracle Attack

Security Flaws Induced by CBC Padding Applications to SSL, IPSEC, WTLS… by Serge Vaudenay

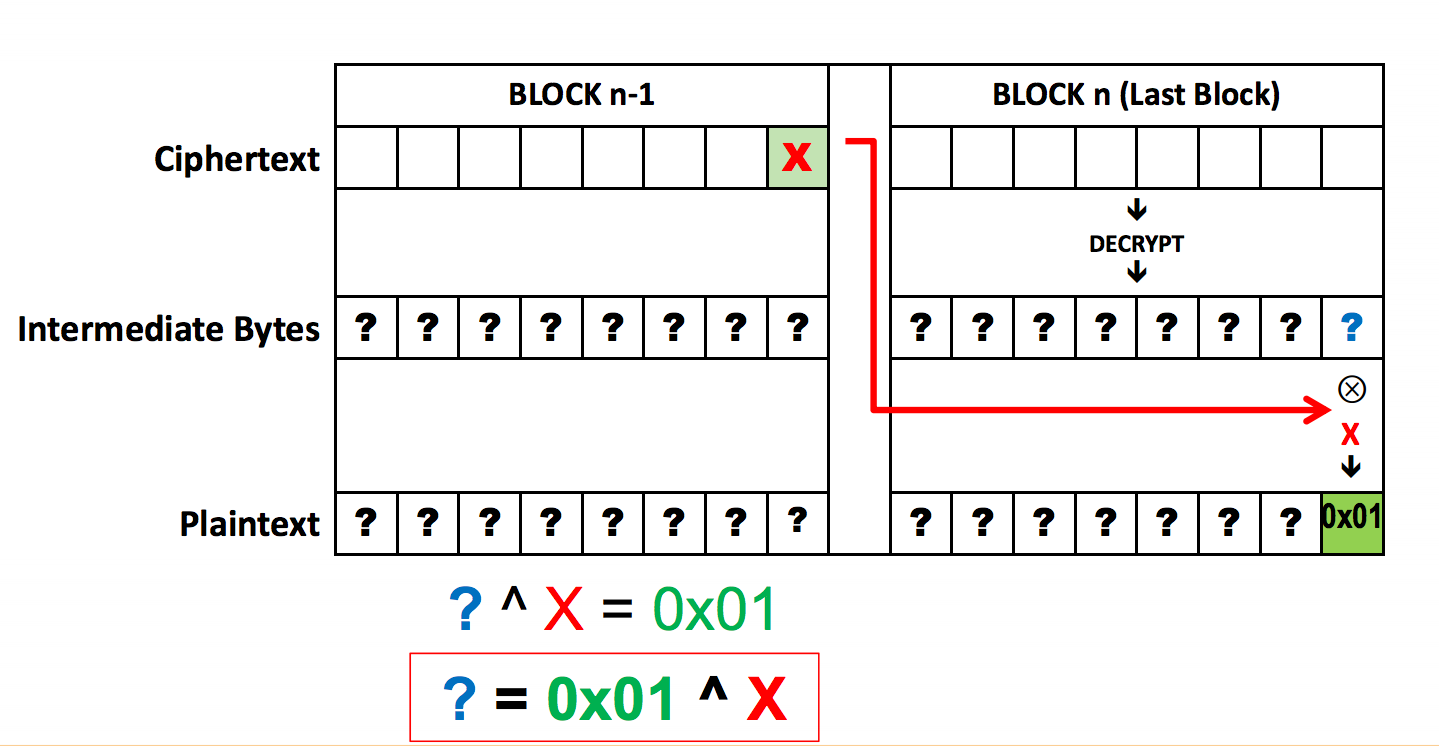

To begin, the attacker creates a Last Word Oracle. This first assumes a 1-byte padding, so the format that the oracle would return as valid is 0x01 XOR’ed with some particular value in the corresponding last position in the n-1th block. Since the last byte, or word, can have 256 distinct values, the attacker can simply manipulate the n-1th block, easily testing all values, until either the possibilities are exhausted or the padding is returned as valid. If the possibilities are exhausted, then the attacker instead tries 0x02, then 0x03, and on until the padding returned is valid.

Source: Brian Holyfield, Gotham Digital Science

Once the attacker has learned the padding, a Block Decryption Oracle is constructed, using the values of the encrypted n-1th block to, byte by byte, guess the preceding byte, using the oracle’s pass/fail responses to confirm correct guesses. This method is then extended to decrypt all other blocks, as it is called on pairs containing a random block and a ciphertext block. (Again, the logical exception is the first block, assuming an independent initialization vector.) This is a terrifyingly efficient attack; to implement it, the attacker only needs (b * W * N)/2 trials, where b is the number of bytes per block, W is the number of possible bytes, and N is the number of blocks.
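
The byte-by-byte recovery can be sketched end to end against a toy server. The standard library has no AES, so the block cipher here is a stand-in (a 4-round Feistel network keyed with HMAC-SHA256), and the IV is assumed to be sent along with the ciphertext; both are assumptions for illustration, as are all function names. The attack code only ever calls `padding_oracle`.

```python
import hashlib
import hmac
import secrets

BS = 16
KEY = secrets.token_bytes(16)             # known only to the "server"

def xor(a, b):
    return bytes(x ^ y for x, y in zip(a, b))

def _f(i, half):
    return hmac.new(KEY, bytes([i]) + half, hashlib.sha256).digest()[:8]

def encrypt_block(block):                 # toy Feistel cipher, not AES
    l, r = block[:8], block[8:]
    for i in range(4):
        l, r = r, xor(l, _f(i, r))
    return l + r

def decrypt_block(block):
    l, r = block[:8], block[8:]
    for i in reversed(range(4)):
        l, r = xor(r, _f(i, l)), l
    return l + r

def cbc_encrypt(plaintext):
    n = BS - len(plaintext) % BS
    data = plaintext + bytes([n]) * n
    blocks = [secrets.token_bytes(BS)]    # IV, sent as the first block
    for i in range(0, len(data), BS):
        blocks.append(encrypt_block(xor(data[i:i + BS], blocks[-1])))
    return b"".join(blocks)

def padding_oracle(ciphertext):
    """Server side: True iff the last decrypted block ends in valid padding."""
    blocks = [ciphertext[i:i + BS] for i in range(0, len(ciphertext), BS)]
    last = xor(decrypt_block(blocks[-1]), blocks[-2])
    n = last[-1]
    return 1 <= n <= BS and last[-n:] == bytes([n]) * n

def decrypt_with_oracle(prev, target):
    """Recover the plaintext of `target` using only padding_oracle."""
    intermediate = bytearray(BS)          # block-decryption of target, right to left
    for pad_len in range(1, BS + 1):
        pos = BS - pad_len
        for guess in range(256):
            forged = bytearray(BS)
            forged[pos] = guess
            for j in range(pos + 1, BS):
                forged[j] = intermediate[j] ^ pad_len
            if not padding_oracle(bytes(forged) + target):
                continue
            if pad_len == 1:
                # Rule out an accidental longer pad such as 02 02.
                forged[pos - 1] ^= 0xFF
                if not padding_oracle(bytes(forged) + target):
                    continue
            intermediate[pos] = guess ^ pad_len
            break
    return xor(intermediate, prev)

secret = b"session=31337; user=alice"
ct = cbc_encrypt(secret)
blocks = [ct[i:i + BS] for i in range(0, len(ct), BS)]
recovered = b"".join(
    decrypt_with_oracle(blocks[i - 1], blocks[i]) for i in range(1, len(blocks))
)
```

Each byte takes at most 256 oracle queries, and 128 on average, which is where the (b * W * N)/2 estimate comes from.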

With the CBC Padding now added to the original ciphered message, attackers can alter this new message with blockwise operations in order to draw information out of the originally unreadable ciphertext. This information could potentially end up being authentication tokens, such as cookies, granting attackers personally identifiable information or the potential to hijack previous sessions.

BEAST: Plaintext Attacks Against SSL

Here Come The \(\oplus\) Ninjas by Thai Duong and Juliano Rizzo (2011)

In CBC block encryption, each plaintext block is XORed with the ciphertext of the previous block before being encrypted. An attempted guess at a plaintext block can be evaluated by encrypting the ciphertext prior to the block in question XORed with the ciphertext prior to the current block XORed with the guess; if the new ciphertext matches that of the block in question, then the guess is correct:

Guess \(G\) can be evaluated as equal to or unequal to plaintext \(P_j\) by setting \(P_i = G \oplus C_{i-1} \oplus C_{j-1}\) and checking whether or not \(C_j = C_i\). An attacker would need to be able to view the encrypted messages and query the underlying CBC encryption system to be able to mount an attack based on this exploit.
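
The guess check can be sketched with a toy chained-IV channel. Since only encryption is needed here, the block cipher is replaced by a truncated HMAC as a stand-in (an assumption; it is deterministic like a block cipher but cannot be decrypted). The class and function names are hypothetical.

```python
import hashlib
import hmac
import secrets

BS = 16
KEY = secrets.token_bytes(16)

def xor(a, b):
    return bytes(x ^ y for x, y in zip(a, b))

def E(block):
    """Stand-in for the block cipher E_k (truncated HMAC, encryption only)."""
    return hmac.new(KEY, block, hashlib.sha256).digest()[:BS]

class ChainedCBC:
    """CBC where the IV of each block is the previous ciphertext block on the wire."""
    def __init__(self):
        self.prev = secrets.token_bytes(BS)

    def encrypt_block(self, plaintext_block):
        self.prev = E(xor(plaintext_block, self.prev))
        return self.prev

channel = ChainedCBC()
secret_block = b"cookie=hunter2!!"            # P_j, unknown to the attacker

c_j_prev = channel.prev                        # C_{j-1}, observed on the wire
c_j = channel.encrypt_block(secret_block)      # C_j, observed on the wire

def guess_is_correct(guess):
    c_i_prev = channel.prev                    # C_{i-1}: IV the next block will use
    p_i = xor(xor(guess, c_i_prev), c_j_prev)  # P_i = G xor C_{i-1} xor C_{j-1}
    c_i = channel.encrypt_block(p_i)           # attacker-chosen plaintext is encrypted
    return c_i == c_j
```

The check works because the two XORs cancel the chaining values, so the cipher is fed \(G \oplus C_{j-1}\), which equals its earlier input exactly when \(G = P_j\).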

Cryptographic systems are limited in their size and ability to store large plaintext messages; for this reason, most cryptographic systems encrypt messages block by block as they are sent. In the case where an attacker can append padding to a message before it is encrypted, an attacker can mount a blockwise chosen-boundary attack, in which the first byte of an unknown message is isolated by padding, enabling the attacker to guess at single bytes of a message rather than block-sized chunks.

The natural extension of this is to repeat the process such that once a byte of message has been guessed by the attacker, the padding is changed such that a single unknown byte of message is encrypted with padding and bytes known to the attacker, allowing them to continue guessing at single bytes of information.

Duong and Rizzo go on to describe the process whereby this attack could be mounted on HTTPS to obtain a user’s HTTP cookie. The attack only requires that an attacker can view encrypted messages, can cause the client to make arbitrary encrypted requests, and that arbitrary plaintext can be added to out-going requests. In the described attack, the user is made to request a package with a padded end such that the first byte of unknown information in the request is isolated in an encryption with only public information. The attacker then makes guesses at that byte of information, appending plaintext to the request and watching the encrypted channel for a message block that matches the block containing the unknown byte of information. At that point, the guess that resulted in that message block is identified as the correct guess. The process is then repeated with a smaller padding until the user’s request header (including their HTTPS cookies) is revealed to the attacker.

Figure: Attacker (Mallory) is able to sniff encrypted traffic, force Alice to send cookie-bearing HTTP requests, and insert forged plaintexts in the conversation.

The paper mentions a few practical obstacles to this attack, but by using one of a variety of plugins, an attacker could make the user open a bi-directional communication channel with the server. On this channel, the privileges the attacker needs to mount the attack are easier to gain.

Lucky 13: Plaintext Recovery from Injected Ciphertext

Lucky Thirteen: Breaking the TLS and DTLS Record Protocols by Nadhem J. Alfardan and Kenneth G. Paterson (2013)

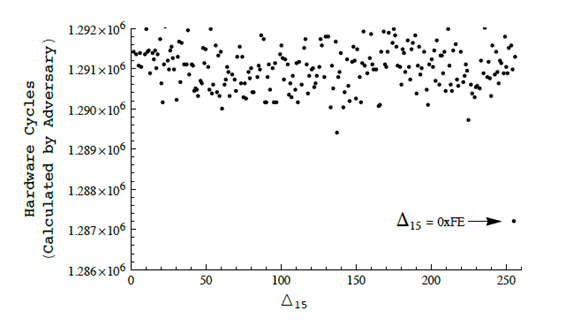

In the same vein as the BEAST attack, which uses bitwise XOR operations to glean useful plaintext information, Lucky Thirteen offers another means of partial or even full plaintext recovery, requiring only a man-in-the-middle who injects ciphertext into the original ciphertext stream. Based on analyzing how TLS and DTLS decrypt a given ciphertext, these attacks also rely on CBC-mode weaknesses.

The Lucky 13 attack relies on a timing channel introduced by the difference in processing time between TLS records with correct and incorrect padding. It requires only a standard man-in-the-middle attacker and, in the most severe case, recovers the full plaintext. This is a major security flaw even in comparison to the aforementioned BEAST attack, which required capabilities beyond simple MITM on the part of the attacker. As a result, the authors of the paper disclosed their results to all major vendors to allow for patching before publishing.

The name “Lucky 13” is a reference to a specific breakpoint in the size of the padding on a given message. Both TLS and DTLS use the HMAC algorithm to compute MAC tags for messages. HMAC's underlying hash applies a compression function to 64-byte blocks, so messages are padded out to a multiple of 64 bytes.

After subtracting 8 bytes for the length field and 1 byte of mandatory padding, we are left with a maximum message length of 55 bytes that can be encoded within one block. This means that messages of at most 55 bytes can be processed with only four compression function evaluations, and that in general the number of compressions is equal to

$$\left\lceil\frac{L-55}{64}\right\rceil+4$$

for messages of L bytes. The compression function is relatively expensive, so the difference between 4 and 5 iterations is distinguishable in good conditions. It is also possible to submit multiple requests as described below to amplify the differences if necessary.
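As a quick check of the formula, the following sketch evaluates it around the block boundaries. The function name `hmac_compressions` is just an illustrative choice.

```python
def hmac_compressions(message_len: int) -> int:
    """Compression-function evaluations for an L-byte message, per the formula."""
    # Ceiling division without floats: ceil(x / 64) == -(-x // 64)
    extra_blocks = -(-(message_len - 55) // 64)
    return max(extra_blocks, 0) + 4


for length in (0, 55, 56, 119, 120):
    print(length, hmac_compressions(length))
```

The count steps up by one at 56 bytes and again at 120 bytes, and it is exactly this one-compression step that the timing channel exposes.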

The authors detail first a distinguishing attack, and then a variation allowing for full plaintext recovery. All attacks rely on the timing channel. Specifically, the authors describe in detail how it is possible to place a target ciphertext block at the end of an encrypted record, causing the decryption function to interpret the plaintext block corresponding to the ciphertext block as padding. Thus the amount of time required to process that block depends on plaintext bytes, leaking information.

Figure: Graph showing the differences in timing due to the number of compressions necessary for varying lengths of bytes.

Source: http://www.isg.rhul.ac.uk/tls/TLStiming.pdf

Conclusion

Through these readings, we see that cryptographic protocols are often broken and need to be patched. There is always some attacker out there looking to exploit the first sign of weakness to decrypt and listen in on what should be a secure, encrypted channel. This week we focused on padding oracle attacks, which take advantage of the padding on blocks in a CBC chain as they are passed from operation to operation. With the last word oracle and the BEAST attack, we saw how important this padding is to the security of the whole operation. With Lucky 13, we saw that attackers can exploit the one extra compression needed in certain situations to glean information about the message. Padding alone has given rise to all of these attacks.

SSL/TLS has so many other aspects that many more exploits surely exist. So, what are ways that we can prevent these attacks? With the padding attacks, we saw that they tried standardizing error messages (but, why not just encrypt the message and send it back?). Should our strategy just be to move as quickly as possible to the newest version of the security protocols? Should we add the MAC to the messages after encryption?

TLS 1.3, the most recent version, has been drafted and is in the process of release; it resolves many of the weaknesses that attacks have exploited in older versions of the protocol. However, its adoption rate has so far been very low. This is worth highlighting: more and more deployments should move to TLS 1.3, as it seems to be the most secure option we have available right now.

Sources

https://tlseminar.github.io/docs/analysisssl3.pdf

http://www.iacr.org/cryptodb/archive/2002/EUROCRYPT/2850/2850.pdf

https://tlseminar.github.io/docs/beast.pdf

http://www.isg.rhul.ac.uk/tls/TLStiming.pdf

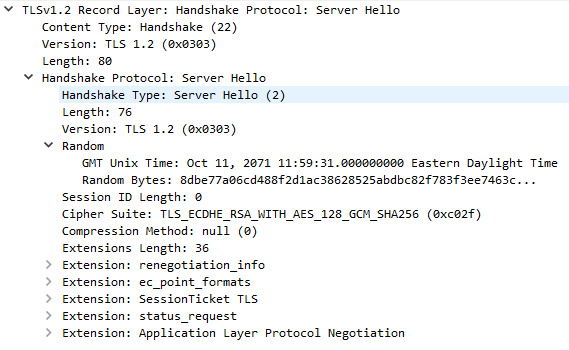

The First Few Milliseconds of a TLS 1.2 Connection

Intro

In 2009, Jeff Moser published an excellent article on the first few milliseconds of an HTTP request. It described in detail how TLS 1.0 connections are established, including a great description of RSA. We’ve attempted to build upon and adapt that article here by describing how the process works for a TLS 1.2 connection. As of January 2nd 2017, TLS 1.2 has roughly 83.2% adoption among top websites, so now is a great time to dive in.

The process of connecting via TLS 1.2 begins when the user attempts to navigate to a website. For this description, we are attempting to navigate to https://example.com/.

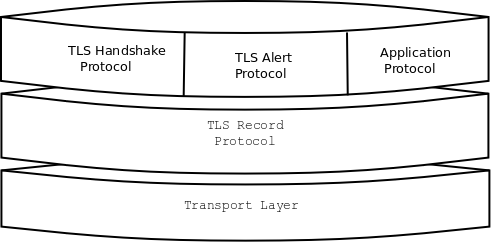

TLS Layers

The TLS protocol is composed of several layers, which come together to form each request. Here are descriptions of the common layers:

Transport Layer - The protocol over which TLS data is distributed. For HTTPS, this will be TCP. Needs only to be reliable (packet loss must be handled). Not a direct part of TLS.

Record Layer - The record layer handles sending/receiving TLS messages, including data fragmentation for packets, (optional and bad) compression, and encryption.

The next three are common protocols that operate within the body of the Record Layer. TLS extensions can specify additional protocols.

Handshake Protocol - Responsible for choosing a cipher suite, connection parameters, and coordinating a shared secret.

Alert Protocol - Used to communicate warnings and errors. The most common alerts are for an invalid server certificate or to signal the end of a TLS connection when the client exits.

Application Protocol - Raw higher-level application data transmitted by TLS. For us, this is HTTP.

Now, onto the first few milliseconds of a TLSv1.2 request!

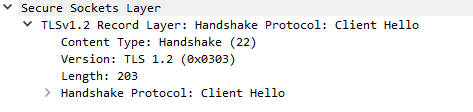

Part 0: The Record Layer

Since the following packets will be wrapped in a Record Layer struct, it’s worth describing that here.

The record packet specifies the Content Type of the request, the TLS version, data length, and then the content data (in this image, a handshake clienthello).

Note that the version specified in the record layer is often different from that specified in the handshake. This is for compatibility with some old TLS/SSL servers. You will often see the version here specified as TLS 1.0 (0x0301, i.e. SSL 3.1).
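To make the layout concrete, here is a minimal sketch that decodes the five-byte record header. The sample bytes and the helper name `parse_record_header` are made up for illustration and are not taken from the capture shown above.

```python
import struct

CONTENT_TYPES = {
    20: "change_cipher_spec",
    21: "alert",
    22: "handshake",
    23: "application_data",
}
VERSIONS = {
    0x0300: "SSL 3.0",
    0x0301: "TLS 1.0",
    0x0302: "TLS 1.1",
    0x0303: "TLS 1.2",
}


def parse_record_header(data: bytes):
    # 1 byte content type, 2 bytes version, 2 bytes length, all big-endian
    content_type, version, length = struct.unpack(">BHH", data[:5])
    return (
        CONTENT_TYPES.get(content_type, "unknown"),
        VERSIONS.get(version, hex(version)),
        length,
    )


# Hypothetical header: a handshake record, record version TLS 1.0, 197-byte body
print(parse_record_header(bytes.fromhex("16030100c5")))
```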

Part 1: Client Hello

Our web browser (Microsoft Edge 38.14393.0.0 on Windows 10) will begin the TLS 1.2 handshake with a ClientHello record.

We can see several important fields here worth mentioning. First, the time (GMT seconds since midnight Jan 1, 1970) and random bytes are included. These will be used later in the protocol to generate our symmetric encryption key.
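A small sketch of how such a value could be assembled, assuming the 32-byte layout of a 4-byte timestamp followed by 28 random bytes. The function name `make_client_random` is hypothetical.

```python
import os
import struct
import time


def make_client_random(now=None) -> bytes:
    # 4-byte big-endian Unix time followed by 28 cryptographically random bytes
    gmt_unix_time = int(time.time() if now is None else now)
    return struct.pack(">I", gmt_unix_time) + os.urandom(28)


client_random = make_client_random()
print(len(client_random), client_random.hex())
```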

The client can send an optional session ID (not sent in this case) to quickly resume a previous TLS connection and skip portions of the TLS handshake.

Arguably the most important part of the ClientHello message is the list of cipher suites. Each cipher suite dictates the key exchange algorithm, bulk encryption algorithm (with key length), MAC, and a pseudo-random function. The list should be ordered by client preference, and the server is responsible for choosing a secure suite it supports, or returning an error if it doesn’t support any.

The final field in the ClientHello specification is for compression methods. However, secure clients will advertise that they do not support compression (by passing “null” as the only algorithm) to avoid the CRIME attack.

Finally, the ClientHello can have a number of different extensions. A common one is server_name, which specifies the hostname the connection is meant for, so webservers hosting multiple sites can present the correct certificate.
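For a sense of what the server_name extension looks like on the wire, here is a sketch of its encoding for a single host name. The helper name `build_server_name_extension` is an illustrative assumption.

```python
import struct


def build_server_name_extension(hostname: str) -> bytes:
    name = hostname.encode("ascii")
    # One list entry: name_type 0 (host_name), 2-byte length, then the name
    entry = struct.pack(">BH", 0, len(name)) + name
    server_name_list = struct.pack(">H", len(entry)) + entry
    # Extension header: type 0 (server_name), 2-byte length of the body
    return struct.pack(">HH", 0, len(server_name_list)) + server_name_list


print(build_server_name_extension("example.com").hex())
```

Because the ClientHello is sent before any keys are agreed, the hostname in this extension travels in the clear.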

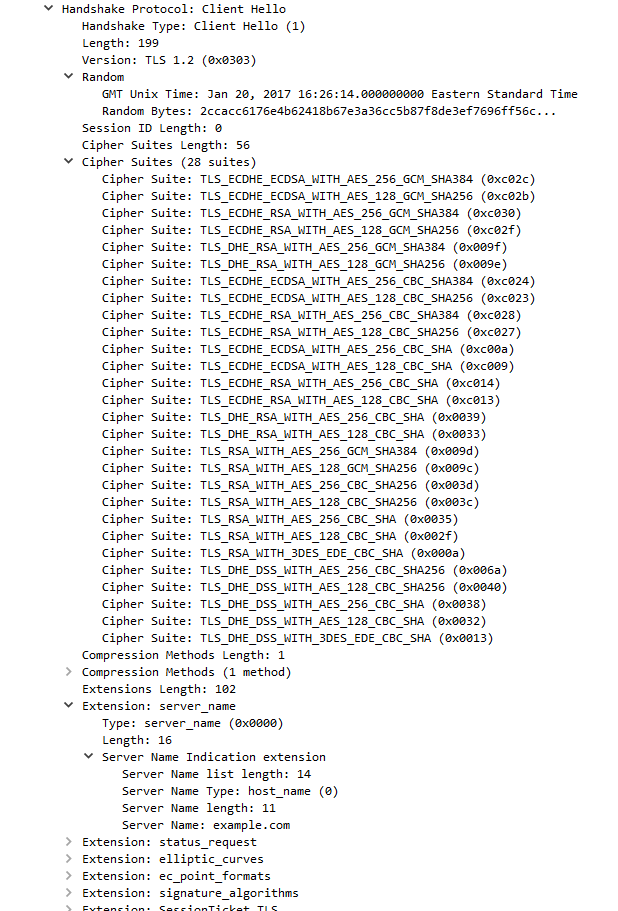

Server Hello

Once the server has processed our ClientHello, it will respond with a TLSv1.2 ServerHello message.

This message returns several important fields, beginning with the TLS version to be used, usually the highest version supported by both client and server. It also includes the server time (not sure why example.com is so off!) and 28 random bytes for use later.

The server also makes a cipher suite selection from the list offered by the client. This should be the strongest suite supported by both. In our case, the server has chosen TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256, indicating the following:

* Key Exchange: Elliptic curve Diffie-Hellman, signed with RSA
* Encryption: AES in GCM mode with 128 bit keys
* Hash: SHA256, used for the pseudo-random function (GCM authenticates records itself, so there is no separate HMAC)
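The suite name itself encodes these choices, and a short sketch can split it apart. The function `parse_cipher_suite` is hypothetical, and it assumes the `TLS_<key exchange>_WITH_<cipher>_<hash>` naming pattern used by suites like this one.

```python
def parse_cipher_suite(name: str) -> dict:
    if not name.startswith("TLS_") or "_WITH_" not in name:
        raise ValueError("unexpected cipher suite name: " + name)
    # Everything before _WITH_ is the key exchange and authentication part
    key_exchange, bulk = name[len("TLS_"):].split("_WITH_", 1)
    # The last component names the hash; the rest is the bulk cipher and mode
    cipher, _, hash_name = bulk.rpartition("_")
    return {"key_exchange": key_exchange, "cipher": cipher, "hash": hash_name}


print(parse_cipher_suite("TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256"))
```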

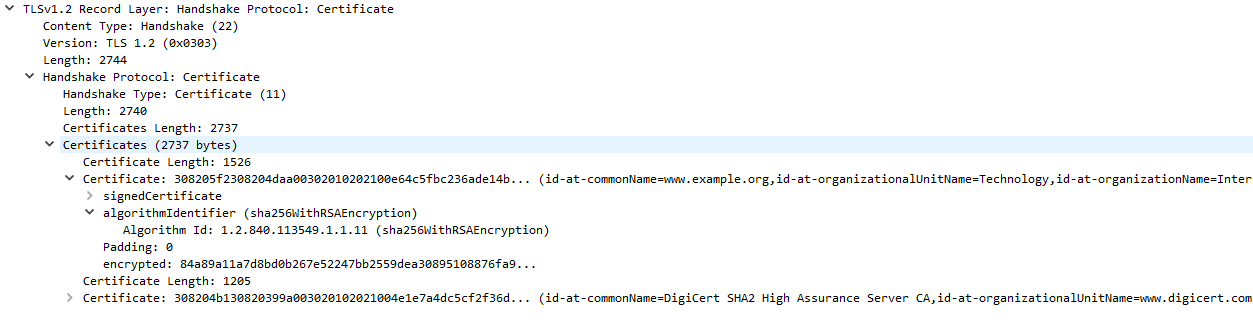

Certificate

After the ServerHello, the server will send a certificate chain to the client if applicable.

Each certificate comes with information about domains it supports, who it was issued by, and the time period (start and end) of its validity.

The certificate chain is a list of certificates beginning with the TLS certificate for the current domain, and ending in a root certificate that is built into the web browser. Each certificate is signed by the certificate above it in the chain, and it is this chain that the client validates to verify the server.
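The structural part of that validation can be sketched as below. This is deliberately incomplete: `ToyCert` and `chain_links_ok` are hypothetical, the dates are invented, and the sketch checks only issuer links, validity windows, and the trusted root. A real client must also verify every signature in the chain, which is omitted here.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class ToyCert:
    subject: str
    issuer: str
    not_before: datetime
    not_after: datetime


def chain_links_ok(chain, trusted_roots, now) -> bool:
    if not chain:
        return False
    # Each certificate must name the next one in the list as its issuer
    for cert, parent in zip(chain, chain[1:]):
        if cert.issuer != parent.subject:
            return False
    # Every certificate must be inside its validity window
    if any(not (c.not_before <= now <= c.not_after) for c in chain):
        return False
    # The chain must end in a self-issued root that the client already trusts
    root = chain[-1]
    return root.issuer == root.subject and root.subject in trusted_roots


start, end = datetime(2016, 1, 1), datetime(2018, 1, 1)  # made-up dates
chain = [
    ToyCert("example.com", "DigiCert SHA2 High Assurance", start, end),
    ToyCert("DigiCert SHA2 High Assurance", "DigiCert High Assurance EV Root CA", start, end),
    ToyCert("DigiCert High Assurance EV Root CA", "DigiCert High Assurance EV Root CA", start, end),
]
print(chain_links_ok(chain, {"DigiCert High Assurance EV Root CA"}, datetime(2017, 1, 20)))
```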

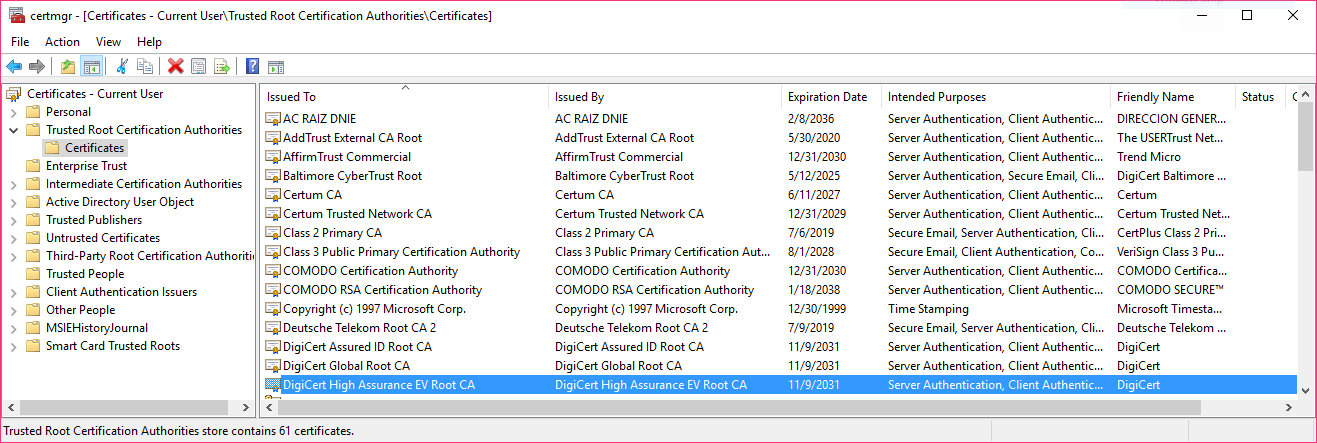

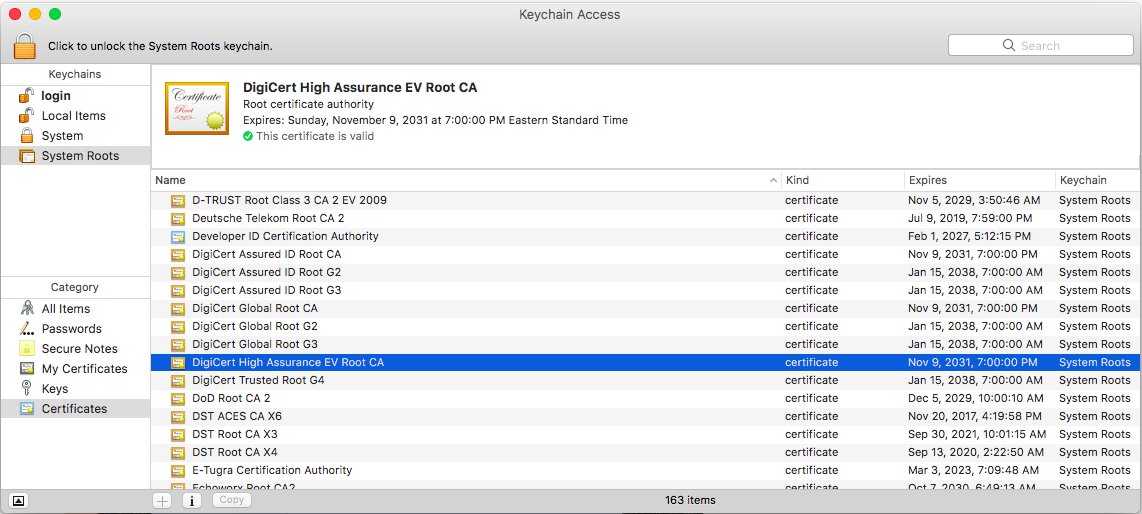

In our case, example.com has a certificate issued by “DigiCert SHA2 High Assurance”, which in turn is issued by the root certificate “DigiCert High Assurance EV Root CA.” On Windows, you can view the list of root certificates on your system with certmgr.msc.

We can see the DigiCert High Assurance EV Root CA in our store, along with several other DigiCert certificates. OSX allows you to see root certificates using the “Keychain Access” program, where they are listed under “System Roots”.

In general, browsers will defer to the operating system root certificates as the central store for their validation. One notable exception is Firefox, which uses its own certificate store and ignores system root certificates by default.

Root CA certificates are implicitly trusted by every system they’re included on. An attacker who manages to control their private key could impersonate any website without raising any red flags, so it’s important that the certificate authorities keep them safe (but that doesn’t always happen).

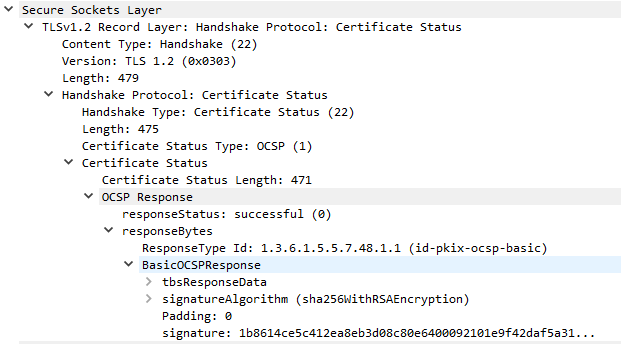

Optional: Certificate Status (OCSP)

One increasingly common extension is the Online Certificate Status Protocol (OCSP), used for certificate revocations. OCSP servers can be consulted by clients to check if the server certificate has been revoked, which helps solve a critical problem with TLS certificates. OCSP servers respond to status requests with a response signed by the CA, containing a status code indicating whether or not the certificate is valid.

Prior to wide deployment of OCSP, TLS vendors shipped certificate revocation lists (CRLs) that contained serial numbers of revoked certificates.

To reduce load on the OCSP system, servers often cache the OCSP response and send it as a Certificate Status TLS message (OCSP stapling). This also prevents attackers from analyzing OCSP requests to determine client browsing habits. The server will send this cached message in response to a status_request extension in the ClientHello message.

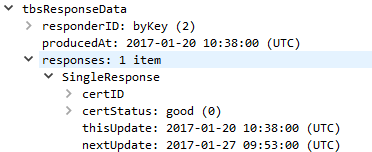

Each OCSP ticket is signed by a trusted OCSP server. The response itself consists of a responseStatus and optional responseBytes with additional information.

In our case, the OCSP ticket is valid and cached for 7 days (1/20 to 1/27). The server itself is responsible for refreshing its OCSP ticket at that time.

ServerKeyExchange

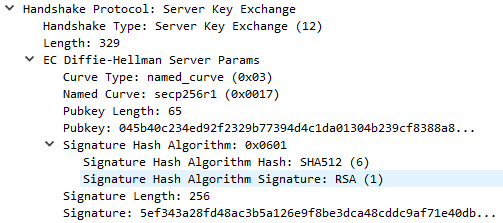

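For ephemeral Diffie-Hellman key exchanges (DHE_DSS, DHE_RSA, and DH_anon, as well as elliptic-curve counterparts such as the ECDHE_RSA exchange chosen here), the server will use a ServerKeyExchange message to specify the parameters for the algorithm.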

The server has specified a named_curve curve type using the secp256r1 elliptic curve (also known as P-256 or prime256v1). This is a public NIST standard curve. With knowledge of the curve to be used, both the server and client will know the crucial $p$ and $G$ values for ECDHE. For secp256r1, these are defined by NIST as:

p = FFFFFFFF 00000001 00000000 00000000 00000000 FFFFFFFF FFFFFFFF FFFFFFFF

G = 03 6B17D1F2 E12C4247 F8BCE6E5 63A440F2 77037D81 2DEB33A0 F4A13945 D898C296

The server will choose a random private key $a$ and compute $a*G$ as its public key. It also signs this data with the private key of its certificate (RSA in our case), signing SHA256(client_random + server_random + server_params).

Server Hello Done

This short message from the server tells the client it’s done sending information. Nothing more.

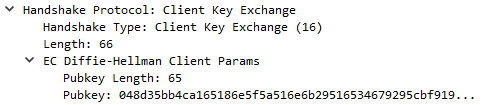

ClientKeyExchange

Now, the client must send its own ephemeral public key (for DH).

This is calculated by generating a random private key $b$ and from there calculating $b*G$ as its public key.

Completing the Key Exchange

Now that the client has $a*G$ and the server $b*G$, both can calculate the final secret value $a*b*G$ with their own private keys. This is known as the pre-master secret. The key detail here is that calculating $a*b*G$ from $a*G$ and $b*G$ alone is computationally difficult.
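The exchange can be sketched end to end in plain Python. This is an illustration only, not a usable implementation: the arithmetic is not constant-time, the helper names are hypothetical, and the y-coordinate of $G$ and the curve coefficient $-3$ are the standard secp256r1 values rather than anything given in the text above.

```python
import secrets

# secp256r1 field prime and base point; the curve is y^2 = x^3 - 3x + b over GF(P)
P = int("FFFFFFFF 00000001 00000000 00000000 00000000 FFFFFFFF FFFFFFFF FFFFFFFF".replace(" ", ""), 16)
A = P - 3
G = (
    int("6B17D1F2 E12C4247 F8BCE6E5 63A440F2 77037D81 2DEB33A0 F4A13945 D898C296".replace(" ", ""), 16),
    int("4FE342E2 FE1A7F9B 8EE7EB4A 7C0F9E16 2BCE3357 6B315ECE CBB64068 37BF51F5".replace(" ", ""), 16),
)


def inv(x: int) -> int:
    # Modular inverse via Fermat's little theorem (P is prime)
    return pow(x % P, P - 2, P)


def add(p1, p2):
    # Affine point addition; None represents the point at infinity
    if p1 is None:
        return p2
    if p2 is None:
        return p1
    (x1, y1), (x2, y2) = p1, p2
    if x1 == x2 and (y1 + y2) % P == 0:
        return None
    if p1 == p2:
        slope = (3 * x1 * x1 + A) * inv(2 * y1) % P
    else:
        slope = (y2 - y1) * inv(x2 - x1) % P
    x3 = (slope * slope - x1 - x2) % P
    return x3, (slope * (x1 - x3) - y1) % P


def mul(k: int, point):
    # Double-and-add scalar multiplication
    result = None
    while k:
        if k & 1:
            result = add(result, point)
        point = add(point, point)
        k >>= 1
    return result


a = 1 + secrets.randbits(255)  # server private key (simplified key generation)
b = 1 + secrets.randbits(255)  # client private key
server_public, client_public = mul(a, G), mul(b, G)
server_shared = mul(a, client_public)  # a * (b * G)
client_shared = mul(b, server_public)  # b * (a * G)
print(server_shared == client_shared)
print(hex(client_shared[0]))  # TLS uses the x-coordinate as the pre-master secret
```

Only $a*G$ and $b*G$ ever cross the wire; each side combines the other's public point with its own private scalar.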

We just have one final step to convert our pre-master secret into the final master secret. We will use the random bytes generated by the client and server earlier along with our chosen pseudo-random function. For us, that was SHA-256.

master_secret = PRF(pre_master_secret, "master secret", client_random + server_random)[0..47]

The TLS 1.2 spec defines the PRF as PRF(secret, label, seed) which expands to P_SHA256(secret, label + seed). The label is the literal ASCII string “master secret” without any null terminator. This expands to the following definition: