Cloudflare Leak

The Cloudflare Leak

How the Incident Developed

Cloudflare is an Internet infrastructure company that provides security and performance services to millions of websites. On February 17th, 2017, Tavis Ormandy, a security researcher on Google’s Project Zero team, noticed that some HTTP requests running through Cloudflare were returning corrupted web pages.

https://twitter.com/taviso/status/832744397800214528

The problem Tavis noticed was that, under certain circumstances, the Cloudflare “edge servers” read past the end of a buffer when returning a web page and appended dumps of memory to the HTML. That memory contained information such as auth tokens, HTTP POST bodies, HTTP cookies, and other private data [1]. To make matters worse, search engines indexed and cached this data, so for a while it was searchable.

http://pastebin.com/AKEFci31

Since the discovery of the bug, Cloudflare has worked with Google and other search engines to remove the affected cached pages.

The Impact

Data could have been leaking as early as September 22nd, 2016, but Cloudflare reported that the period of highest impact was from February 13th through February 18th, 2017, with around 1 in every 3,300,000 HTTP requests potentially resulting in memory leakage [1]. It is difficult to assess how much data was leaked, especially since the corrupted results and their cached versions were quickly removed from search engines, but Wired reported that data from large companies such as Fitbit, Uber, and OKCupid was found in a sample of affected web pages.

Cloudflare asserts that the leak did not reveal any private keys, and that although other sensitive information was leaked, it did not appear in the HTML content of particularly high-traffic sites, which limited the damage.

Overall, about 3,000 customers’ sites triggered the bug, but the leaked data could have come from any other Cloudflare customer whose traffic passed through the same edge servers. Cloudflare is aware of about 150 customers who were affected in that way.

The Bug Itself

As mentioned earlier, the problem resulted from a buffer being overrun and thus additional data from memory being written to the HTML of web pages. But how did this happen, and why did it happen now?

Some of Cloudflare’s services rely on modifying, or “rewriting,” HTML pages as they are routed through the edge servers. In order to do this rewriting, Cloudflare reads and parses the HTML to find elements that require changing. Cloudflare used to use an HTML parser written with Ragel, a tool that compiles a description of a regular language into a finite state machine. However, about a year ago they decided that the Ragel-based parser was a source of technical debt and wrote a new parser called cf-html to replace it [1].
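To make the idea of a parser as a finite state machine concrete, here is a minimal hand-written sketch in C. It is neither Cloudflare's parser nor Ragel output; the state names and the count_open_tags function are hypothetical and chosen only for illustration. It walks a buffer one byte at a time, moves between three states, and counts opening tags.

```c
#include <assert.h>
#include <ctype.h>
#include <stddef.h>

/* Hypothetical states for a toy HTML scanner (not Cloudflare's). */
enum state { ST_TEXT, ST_LT, ST_TAG };

/* Counts opening tags ('<' followed by a letter) in buf[0..len).
   The loop condition p < len keeps every read inside the buffer. */
static int count_open_tags(const char *buf, size_t len) {
    assert(buf != NULL);
    enum state st = ST_TEXT;
    int tags = 0;
    for (size_t p = 0; p < len; p++) {
        unsigned char c = (unsigned char)buf[p];
        switch (st) {
        case ST_TEXT:                 /* plain text: wait for '<' */
            if (c == '<') st = ST_LT;
            break;
        case ST_LT:                   /* just saw '<' */
            if (isalpha(c)) { tags++; st = ST_TAG; }
            else if (c != '<') st = ST_TEXT;
            break;
        case ST_TAG:                  /* inside a tag: wait for '>' */
            if (c == '>') st = ST_TEXT;
            break;
        }
    }
    return tags;
}
```

A tool like Ragel generates this kind of state-transition code from a grammar, typically with many more states and with a pointer and an end pointer in place of the index and length used here.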

Cloudflare first rolled this new parser out for their Automatic HTTPS Rewrites service and have since been slowly migrating other services away from the Ragel-based parser. In order to use these parsers, Cloudflare adds them as modules to NGINX, the web server and reverse proxy that runs on their edge servers [1].

As it turned out, the parser that was written with Ragel had contained a bug for several years, but there was no memory leak because of the way the internal NGINX buffers were configured. When cf-html was adopted, the buffering changed subtly, enabling the leakage.

The actual bug was caused by what you might expect: a pointer error in the C code generated by Ragel (but the bug was not the fault of Ragel).

https://blog.cloudflare.com/incident-report-on-memory-leak-caused-by-cloudflare-parser-bug/

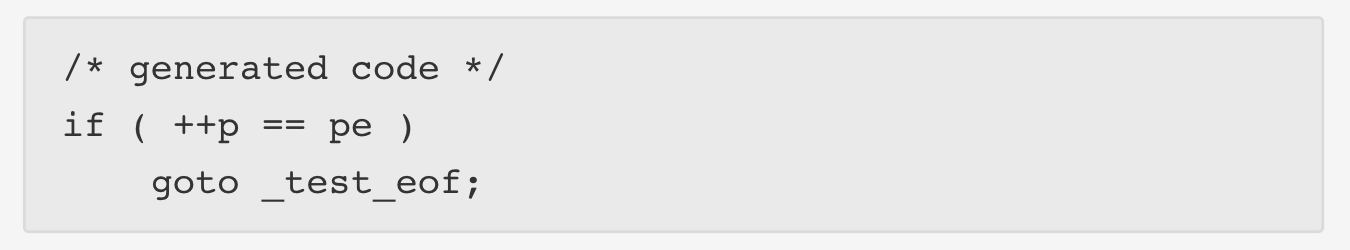

As can be guessed from this snippet, the cause of the bug was that the check for the end of the buffer was done using the equality operator, ==, instead of >=, which would have caught the pointer once it had moved past the end. That snippet is the generated code. Let’s look at the Ragel source that generated it.

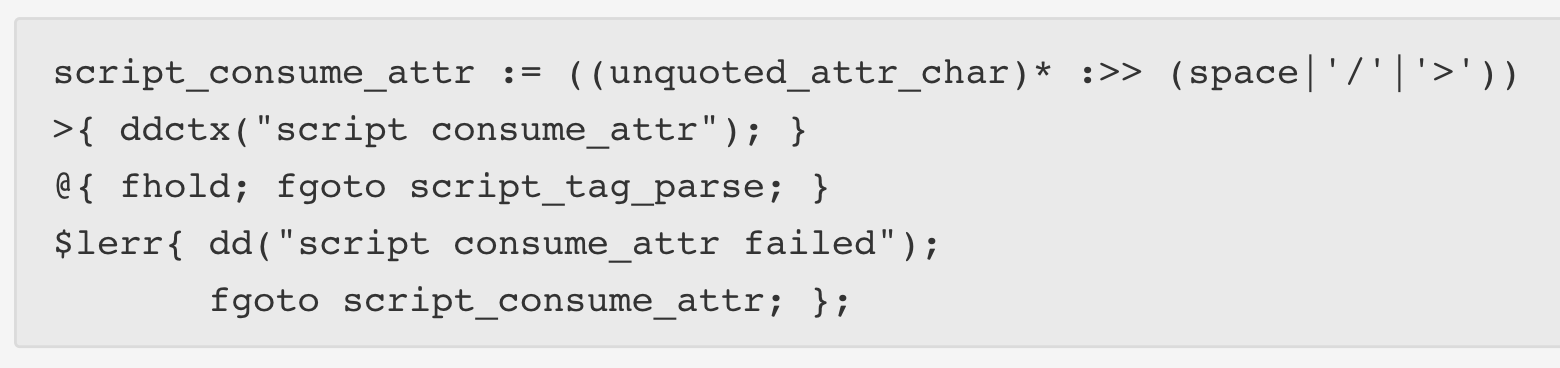

In order to check for the end of the buffer when parsing a <script> tag, this piece of code was used:

https://blog.cloudflare.com/incident-report-on-memory-leak-caused-by-cloudflare-parser-bug/

What it means is that in order to parse the end of the tag, zero or more unquoted_attr_char are parsed followed by whitespace, /, or > signifying the end of the tag. If there is nothing wrong with the script tag, the parser will move to the code in the @{ }. If there is a problem, the parser will move to the $lerr{ } section.
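The rule just described can be sketched by hand in C. This is an illustration under assumptions, not the Ragel-generated code: the function name consume_unquoted_attr and the result enum are hypothetical, and an unquoted_attr_char is assumed to be any byte other than whitespace, / or >. Unlike the buggy parser, this sketch reports an error and stops when the buffer ends before a terminator is found.

```c
#include <assert.h>
#include <ctype.h>
#include <stddef.h>

enum attr_result { ATTR_OK, ATTR_ERR };

/* Consumes zero or more unquoted attribute characters starting at *pos.
   On success, *pos is left on the terminator (whitespace, '/' or '>'),
   so the caller sees that byte again, much like fhold in Ragel.
   On error (buffer ended first), *pos is set to len, never beyond it. */
static enum attr_result consume_unquoted_attr(const char *buf, size_t len,
                                              size_t *pos) {
    assert(buf != NULL && pos != NULL && *pos <= len);
    size_t p = *pos;
    while (p < len) {
        unsigned char c = (unsigned char)buf[p];
        if (isspace(c) || c == '/' || c == '>') {
            *pos = p;
            return ATTR_OK;
        }
        p++;
    }
    *pos = len;
    return ATTR_ERR;
}
```

For the input async> the function stops on the > and returns ATTR_OK. For type= with nothing after it, the function returns ATTR_ERR with the position equal to the buffer length.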

The bug was triggered when a web page ended with a malformed HTML tag such as <script type=. The parser would run dd("script consume_attr failed"), which just prints debug output, but then instead of failing, it would execute fgoto script_consume_attr;, which means it tried to parse another attribute.

Notice that the @{ } block has an fhold while the $lerr{ } block does not. It was the lack of the fhold in the second block that caused the leak. In the generated code, fhold is equivalent to p--. Without it, if the malformed tag error happens at the end of the buffer, the next increment moves p past the end of the document, the equality check against the end of the buffer never succeeds, and p overruns the buffer.
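The interaction between the missing fhold and the == check can be modelled with a small C sketch. It is a simplified model, not the generated parser: the function resume_after_error, its parameters, and the sample data are all hypothetical. Indices into a single string stand in for the pointers p and pe, and the bytes after index pe play the role of unrelated memory that happens to sit after the document. A demo-only guard stops the loop at the end of the string so the sketch itself never reads out of bounds.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Models resuming the parser after the error action fired at the end
   of the document (p == pe). Copies every byte read beyond pe into
   leak and returns how many were read.
   hold   != 0: the error action performs fhold (p--) before resuming.
   use_ge != 0: the end-of-buffer check uses >= instead of ==. */
static size_t resume_after_error(const char *mem, size_t pe,
                                 int hold, int use_ge, char *leak) {
    size_t mem_len = strlen(mem);
    assert(pe >= 1 && pe <= mem_len);
    size_t p = pe;   /* error fired at the end of the document */
    size_t n = 0;
    if (hold) p--;   /* fhold: step back one byte */
    for (;;) {
        ++p;         /* advance, then test, as the generated code does */
        if (use_ge ? (p >= pe) : (p == pe)) break; /* end-of-buffer check */
        if (p >= mem_len) break;                   /* demo-only guard */
        leak[n++] = mem[p];                        /* read beyond pe */
    }
    leak[n] = '\0';
    return n;
}
```

With the document <script type= followed in memory by |cookie=SECRET and pe set to 13, calling the function with hold and use_ge both 0 copies cookie=SECRET into leak. Setting either hold or use_ge to 1 stops the loop immediately and nothing is read, which matches the two fixes described above.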

The Response From Cloudflare

Cloudflare seems to have responded relatively well to this bug. After the bug was brought to their attention, they performed an initial mitigation in 47 minutes and went on to disable all of the services that used cf-html. Luckily, Cloudflare has a ‘global kill’ [1] feature that allows any service to be disabled globally. Since Email Obfuscation was the main cause of the leak, it was disabled first, and Automatic HTTPS Rewrites was killed about 3 hours later. About 7 hours after the bug was detected, a fix was deployed globally. As mentioned previously, Cloudflare also contacted search engines to get the affected pages and their cached versions removed from the web.

Less impressive were the lessons Cloudflare said they learned in their incident report. Essentially, these amounted to saying the bug was a corner case in an “ancient piece of software” and that they will be looking to “fuzz older software” to look for other potential problems [1]. We hope Cloudflare will take this incident more seriously and consider the systemic issues that allowed such a problem to occur and persist.

Further Thoughts

Bugs like this expose the difficulty of ensuring software correctness. It is quite unlikely that a corner case like this would have been caught by human eyes, and even a fuzzer would have had to trigger some exceptional conditions in order to expose the bug. On the other hand, many tools and processes exist for detecting these types of problems, and there is little excuse for not using them on security-critical software.

Reference

[1] https://blog.cloudflare.com/incident-report-on-memory-leak-caused-by-cloudflare-parser-bug/